At the beginning of May 2015, webmasters far and wide witnessed severe traffic fluctuations, with some sites losing over 50% of their traffic! Clearly, Google had changed something, but what? Because of the initial uncertainty, it was called the “Phantom Update” (II). In this post, I’ll provide you with Searchmetrics data to shed more light on the return of the Phantom.

It didn’t take long for members of the industry to start putting together their own research and explanations. Digital marketing veteran Glenn Gabe conducted extensive research on the update and named it the “Phantom Update II” because of a similar development he had seen in May 2013, exactly two years earlier.

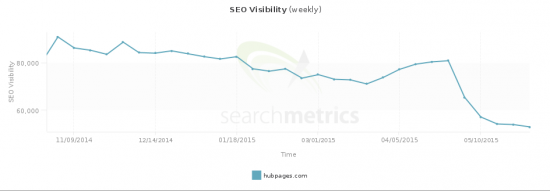

Co-founder of HubPages, Paul Edmondson, wrote about how his website allegedly* lost 22% of traffic because of this update. Eventually, Barry Schwartz of Search Engine Land received an official confirmation from Google about the update in mid-May, proving once and for all that we weren’t just imagining ghosts!

This is huge. To help you understand Phantom II and its potential power, we’ve scanned our database to present the winners and losers of this update, along with an analysis of Phantom II’s main changes. (*Plus: in this article, I’ll show you how much HubPages REALLY lost.)

Check your Visibility with Searchmetrics Suite Software

Want to check your SEO Visibility? You don’t even need a login to get your current performance as well as information about this week’s winners and losers and Desktop vs. Mobile Top Ten.


Phantom vs. Quality Update vs. Reverse Panda

The update was first named “Phantom II”, then “Quality Update,” and then most recently, “Reverse Panda.” To avoid confusion, I will be using the name “Phantom II” because the update has unique features that make it more than just “Reverse Panda,” and because “Panda” was also a “Quality Update.”

Before I dive into the winners and losers, let’s first take a step back and look at the facts we have about Phantom II so far.

- This update is a quality filter that has been integrated into the core ranking algorithm.

It changes “[…] how it processes quality signals.” It is not an update that has to be refreshed manually, so webmasters can expect to see changes at any time.

- Phantom II operates on a page level.

This means it will only affect low-quality pages that have low rankings, not the entire domain. However, changes to these underperforming pages will indirectly impact the domain as a whole.

- Many sites that have been affected by Phantom II were also affected by Panda.

This made a lot of people believe that this update was just a Panda refresh or that Panda had finally been integrated into the core algorithm, which has been one of Google’s long-term goals. The theory was further supported by the fact that Panda hadn’t been refreshed since October 2014. However, this is clearly not the case, since Google just announced that Panda will be refreshed within the next few weeks.

- The update strongly impacted “how to” sites and pages.

This has less to do with the content on the sites, and more to do with how the sites function (a lot of user-generated content) and earn money (a lot of ads). I’ll dive into more detail later in this piece.

- The update rolled out over several weeks.

Some sites saw decreases over three to four weeks, while others lost rankings overnight. How quickly a site lost traffic seems to be connected to the severity of its problems.

- Many big brands were impacted negatively.

It seems as if the brand bonus and brand authority are no longer enough to outrank other pages with higher quality/better content. That’s not to say there weren’t big brand winners though.

- As with all Google updates, it’s difficult to draw a definite causal relationship between one website’s success and another’s failure.

In this case the impact seems to be two-fold; not only were some websites severely punished, deserving websites were also rewarded. This allows us to identify clear winners and losers, which has not always been the case with other Google updates.

According to our data, the initial implementation of Phantom II was clearly somewhere between April 26th and May 3rd. Based on information from other sources, as well as the fact that Google tends to roll out updates over the weekend, I’d say that Phantom II was released on the night of May 2nd.

So what triggers the update?

The answer becomes clear when we look at the negative qualifiers. Analyzing our data and looking at the winners/losers of this update gave us useful insights into why sites were being affected by Phantom II:

1. Ads above the fold and popups

Too many ads above the fold and annoying popups definitely have a negative impact.

Google supposedly measures how distracting these ads get by using user signals, such as bounce rate, time on site, and pages per visit.

If all that people see on your page are ads, Google might not like it very much. It seems that this factor is weighed more heavily than before.

There is a hierarchy of annoyance in terms of ads.

At the top are popups, and then all other ads, including banners, fall below that. Google might also take into account the ratio of legitimate content to ads on a page.

2. Self-starting videos

Self-starting videos have a similar impact to popups: they disturb the user experience. They definitely belong in the ad category, but I wanted to list them separately because their importance seems to have grown in the quality evaluation of a page.

Google knows the pain of having to click through all your tabs to find out where that mysterious music is coming from; Google also knows the pain of being disturbed by an auto-loading video when all you want to do is quietly read the content on a page.

That is why Google might punish self-starting videos in the future.

3. Duplicate content

Duplicate content is a definite no-no with Phantom II, especially when dealing with UGC (user generated content), which is often found on “how to” platforms. It can be difficult to identify how much of the content was copied from other sources and how much of it was original.

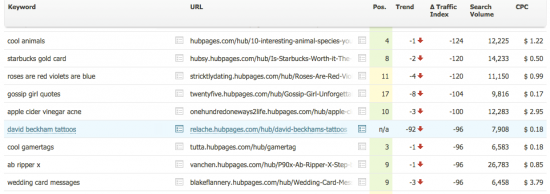

On HubPages for example, users can create their own “Hub” on a subdomain and write all kinds of content (mostly tutorials and explanations). But it is difficult, if not impossible, for HubPages to curate for original content on all of these hubs.

As you can see in this example, some parts of this Hub were copied from other sources (Pinterest, Facebook, and so on), which is a clear case of duplicate content.

Sometimes duplicate content is a harder issue to penalize since it can be difficult to determine who copied from whom. When we look at the ranking loss hubpages.com experienced after Phantom II, we see that this particular page sticks out with a loss of 92 positions!

Rottentomatoes.com is also a victim of duplicate content since movie descriptions on the site are often copied by masses of other high-authority sites.

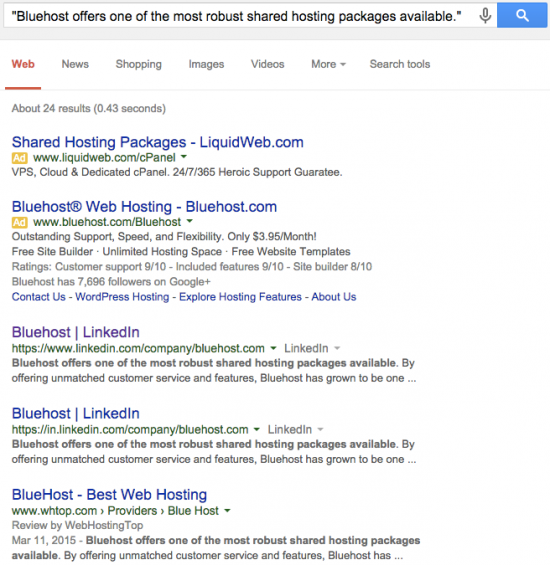

Another company in the duplicate content battle is LinkedIn. Their issue lies within their own domain where businesses create multiple pages in the company directory.

4. Poor content

Besides duplicate and thin content, poor content is another factor that can trigger Phantom II. Poor content can be copy that’s hard to read or does not really explain the topic.

Sometimes, a more detailed analysis (e.g. WDF * IDF) is necessary to identify off-topic pieces. This is also strongly linked to semantic content. Imagine writing about “tires” and not mentioning the word “car” once: how informative can that article really be?
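To make the WDF * IDF idea more concrete, here is a minimal Python sketch. It assumes one commonly cited formulation (a log-damped term count normalized by document length, multiplied by a log-scaled inverse document frequency); the tools you use may apply a different variant, and the function names and the tiny corpus are purely illustrative.

```python
import math
from collections import Counter


def wdf(term: str, doc_tokens: list[str]) -> float:
    """Within-document frequency: log-damped count of the term,
    normalized by the log of the document length."""
    if len(doc_tokens) < 2:
        return 0.0  # log2(1) is 0, so avoid dividing by zero
    freq = Counter(doc_tokens)[term]
    return math.log2(freq + 1) / math.log2(len(doc_tokens))


def idf(term: str, corpus: list[list[str]]) -> float:
    """Inverse document frequency: terms found in fewer documents score higher."""
    containing = sum(1 for doc in corpus if term in doc)
    if containing == 0:
        return 0.0
    return math.log10(1 + len(corpus) / containing)


def wdf_idf(term: str, doc_tokens: list[str], corpus: list[list[str]]) -> float:
    """Combined weight of a term for one document within a corpus."""
    return wdf(term, doc_tokens) * idf(term, corpus)


# Illustrative corpus (an assumption): your page plus two competing pages.
corpus = [
    "tires are round and black and made of rubber".split(),      # your page
    "winter tires keep your car safe on icy roads".split(),      # competitor
    "the right tires help a car stop faster in the rain".split(),  # competitor
]

for index, doc in enumerate(corpus):
    print(index, round(wdf_idf("car", doc, corpus), 3))
```

A page whose score for an expected term is zero while competing pages score above zero is a candidate for the kind of off-topic or incomplete content described above.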

5. Design

Design has a big influence on user experience. Content isn’t everything; looks play an important role, too! In my research, I’ve seen a small number of sites with strong content but weak design that have lost more than 30% in rankings.

6. 404 Status Codes – Errors


404 and soft 404 errors are a problem, too.

They usually translate into a bad user experience, because they consist of either pages with no content or pages that no longer host the content someone is searching for.

An excessive number of 404 errors is an indicator of a poorly maintained website.

7. Problems with Comments

Too many comments can also be a target of Phantom II. A deluge of comments can dilute the main content since we’re not yet sure if Google can distinguish comments from the main content and weigh them accordingly.

It is difficult to provide a clear threshold, but I’ve seen pages being punished for having over 60% of the content consist of comments, even though the main content consisted of more than 1,000 words. It seems that no matter how long and good your main content is, you might still be at risk for being targeted by Phantom.
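If you want to check your own pages against that rough 60% observation, a minimal Python sketch could look like this. The 0.60 limit comes from the pages I looked at, not from anything Google has confirmed, and the function names are hypothetical.

```python
# Share of comment words above which a page looked risky in my sample.
# This is an observation, not a confirmed Google threshold.
COMMENT_SHARE_LIMIT = 0.60


def comment_share(main_text: str, comments: list[str]) -> float:
    """Fraction of all words on the page that come from comments."""
    main_words = len(main_text.split())
    comment_words = sum(len(comment.split()) for comment in comments)
    total = main_words + comment_words
    return comment_words / total if total else 0.0


def is_comment_heavy(main_text: str, comments: list[str],
                     limit: float = COMMENT_SHARE_LIMIT) -> bool:
    """True when comments make up more of the page than the chosen limit."""
    return comment_share(main_text, comments) > limit
```

Note that a 1,000-word article with 2,000 words of comments already lands at roughly 67%, which is why long main content alone does not protect a page.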

Now let’s get to the meat and potatoes!

Winners and Losers of the Phantom II Update

Here are the most important winners and losers of Phantom II:


| Phantom II Losers | Loss | Phantom II Winners | Gain |
| --- | --- | --- | --- |
| Weheartit.com | -87% | Outlettable.com | 579% |
| Upworthy.com | -72% | Religionfacts.com | 224% |
| Ehow.com | -56% | Imgur.com | 166% |
| Searchenginewatch.com | -42% | Vudu.com | 141% |
| Isitdownrightnow.com | -39% | Alexa.com | 130% |
| Wisegeek.com | -37% | Livestream.com | 129% |
| Examiner.com | -37% | Quora.com | 125% |
| Hubpages.com | -33% | Genius.com | 124% |
| Movieweb.com | -27% | Couponcabin.com | 119% |
| Rottentomatoes.com | -27% | Dealsplus.com | 119% |
| Offers.com | -26% | Stereogum.com | 119% |
| Foursquare.com | -22% | Thesaurus.com | 117% |
| Answers.com | -21% | Groupon.com | 116% |
| Pinterest.com | -15% | Qz.com | 114% |
| Linkedin.com | -15% | Epicurious.com | 112% |
| Merriam-Webster.com | -13% | Arstechnica.com | 110% |
| Wikihow.com | -11% | Amazon.com | 105% |
| Yelp.com | -10% | Webmd.com | 102% |


Don’t forget that these are relative values. If we sort the list by absolute values, Amazon is the clear winner, followed by alexa.com, thesaurus.com and genius.com! We already know that HubPages lost rankings/traffic. They stated a loss of 22%, but if you look at the development over three weeks, they really lost 33%!

What to do if Phantom II has impacted you negatively?

1. Indexation

If you are at risk of having too much thin content, try creating longer and more unique content, or use filters and thresholds to noindex pages that have fewer than a certain number of words.
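A word-count filter of this kind could be sketched in Python as follows. The 300-word threshold and the function names are assumptions chosen for illustration; pick a limit that fits your own content, and treat the regex-based tag stripping as a rough approximation rather than a full HTML parser.

```python
import re

# Hypothetical threshold: pages with fewer visible words get noindexed.
MIN_WORDS = 300


def visible_word_count(html: str) -> int:
    """Rough count of visible words: drop script/style blocks, then all tags."""
    text = re.sub(r"(?is)<(script|style)\b.*?</\1>", " ", html)
    text = re.sub(r"(?s)<[^>]+>", " ", text)
    return len(re.findall(r"\w+", text))


def robots_meta_tag(html: str, min_words: int = MIN_WORDS) -> str:
    """Return the robots meta tag to emit for this page."""
    if visible_word_count(html) < min_words:
        # Keep following links so internal link equity still flows.
        return '<meta name="robots" content="noindex, follow">'
    return '<meta name="robots" content="index, follow">'
```

Word count is only a proxy for thin content, so it is worth reviewing the pages that fall just under the limit before noindexing them in bulk.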

2. Comments

Limit comments on negatively affected pages and use either a pagination or drop-out solution for the remaining pages. You need to iterate on the right amount here.

3. Ads

Decrease the number of banner ads, popups, and self-starting videos above the fold. I know companies have to make money, but if no one visits the site or page to click on the ads, then all the content you created and the money that sponsors spent will have been for naught. It’s important to find a balance point.

4. 404

Check the Google Search Console for 404 and soft 404 errors, and make sure to get rid of them. The problem is mostly with internal links to pages that are no longer available or to pages with no results or content.
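If you already have a list of internal links from a crawl, a small Python sketch like the one below can group broken targets by the pages that link to them. The input format (pairs of source page and target URL) and the function names are assumptions. Soft 404s return a 200 status, so they will not show up here and still need a manual or content-based check.

```python
import urllib.error
import urllib.request
from typing import Callable, Iterable


def http_status(url: str, timeout: float = 10.0) -> int:
    """Return the HTTP status code for a URL using a HEAD request.
    Connection failures (urllib.error.URLError) are not handled here."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code


def find_broken_links(
    links: Iterable[tuple[str, str]],
    get_status: Callable[[str], int] = http_status,
) -> dict[str, list[str]]:
    """Map each target URL that returns 404 to the pages linking to it."""
    status_cache: dict[str, int] = {}
    broken: dict[str, list[str]] = {}
    for source_page, target_url in links:
        if target_url not in status_cache:
            status_cache[target_url] = get_status(target_url)
        if status_cache[target_url] == 404:
            broken.setdefault(target_url, []).append(source_page)
    return broken
```

The result tells you which pages to edit, since fixing or removing the internal link is usually quicker than redirecting every dead URL.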

5. User Signals

Analyze your user signals on a page level and pin-point where people bounce. You need to figure out which pages people are happy with and which ones they’re not. This will give you insights into content quality and relevance, which you can then use to fix existing pages and create new pages.
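As a starting point for a page-level analysis, here is a minimal Python sketch that ranks landing pages by bounce rate. It assumes you can export sessions as pairs of landing page and pages viewed, and it treats a one-page session as a bounce; your analytics tool may define a bounce differently, and the function name is hypothetical.

```python
from collections import defaultdict
from typing import Iterable


def pages_by_bounce_rate(
    sessions: Iterable[tuple[str, int]],
    min_sessions: int = 1,
) -> list[tuple[str, float]]:
    """Return (landing_page, bounce_rate) pairs, worst pages first.

    sessions: (landing_page, pages_viewed) per visit.
    min_sessions: ignore pages with too few visits to judge.
    """
    totals: dict[str, int] = defaultdict(int)
    bounces: dict[str, int] = defaultdict(int)
    for landing_page, pages_viewed in sessions:
        totals[landing_page] += 1
        if pages_viewed <= 1:  # assumption: one page viewed counts as a bounce
            bounces[landing_page] += 1
    rates = [
        (page, bounces[page] / total)
        for page, total in totals.items()
        if total >= min_sessions
    ]
    return sorted(rates, key=lambda item: item[1], reverse=True)
```

Raising `min_sessions` keeps pages with only a handful of visits from dominating the top of the list.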

Need assistance or interested in some more information? We are happy to help:

Get in touch or request a Software Demo

What are your thoughts about Phantom II? Have you been hit? Let me know in the comments!
