In the “Big Data in SEO” series I examine the seven topics that are important for enterprise SEOs. This article looks at technical on-page optimization and keyword targeting.

Since late 2011 the technical performance of a website has been a true ranking factor, and that doesn't just mean speed. What matters is generating as few errors as possible. The Searchmetrics crawler uses Big Data to uncover the technical problems within large websites.

Essentially, from an SEO standpoint a website's technology should above all stay out of the way of the Google robot, which should be able to read and evaluate every URL as quickly as possible. That is not so simple, however, particularly for large websites. Two areas of on-page optimization quickly become confusing:

- Site Optimization: (Almost) all websites have technical issues. They link to pages that no longer exist, their canonical tags point to the wrong targets, or their HTML document structure is unclear. Our site optimization process examines these and many other issues: our crawler checks up to 100,000 sub-pages for potential errors, warnings and messages.

- Keyword Targeting: For document “XY” to rank well for a given keyword, it has to show the Google robot what it is about. That includes which keyword the document is most relevant for and whether the internal linking also focuses on that keyword. Our crawler analyzes this for every keyword and for the page that is supposed to rank for it.

Site Optimization

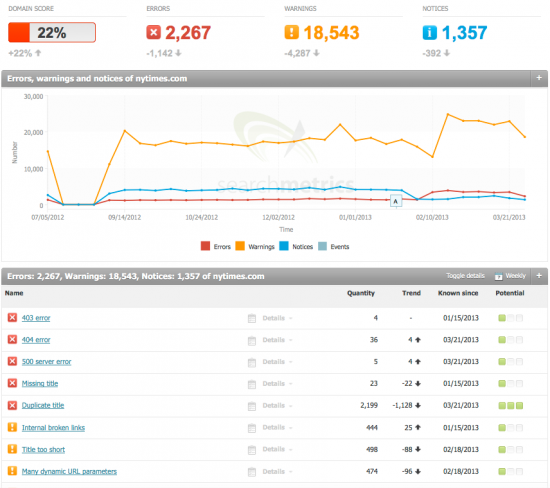

Even the New York Times' technical team makes mistakes, and quite a few of them, as the Searchmetrics site optimization report above shows.

The report clearly shows the total of all errors, warnings and messages, all of them things a developer should take care of. The graph also shows the history of these messages over time, so you can see exactly how the project has developed.

This data comes from our crawler, which runs on every domain entered into a project and records everything worth noting. It checks some 50 factors that play a part in a site's on-page optimization, including dead links and other server errors. It also checks, of course, whether the document metadata (title tag, description, robots meta tag, etc.) is properly filled in and whether the document structure can be read from the headline formatting. Many other factors matter for SEO as well: for example, we notify the webmaster when a page has too many outbound links or when a URL is too long.
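A few of the checks described above can be sketched roughly as follows. This is a minimal illustration using only Python's standard library (`html.parser`, `urllib.parse`), not the Searchmetrics crawler: it covers just a handful of the roughly 50 factors, and the function name `check_page` and the thresholds `MAX_OUTBOUND_LINKS` and `MAX_URL_LENGTH` are hypothetical values chosen for the example.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse

# Hypothetical thresholds for illustration only, not Searchmetrics' values.
MAX_OUTBOUND_LINKS = 100
MAX_URL_LENGTH = 115


class OnPageParser(HTMLParser):
    """Collects the few on-page elements the checks below need."""

    def __init__(self) -> None:
        super().__init__()
        self.title = ""
        self.meta = {}          # lower-cased meta name -> content
        self.h1_count = 0
        self.hrefs = []         # every link target found on the page
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and attributes.get("name"):
            self.meta[attributes["name"].lower()] = attributes.get("content") or ""
        elif tag == "h1":
            self.h1_count += 1
        elif tag == "a" and attributes.get("href"):
            self.hrefs.append(attributes["href"])

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def check_page(url: str, html: str) -> list[str]:
    """Returns human-readable issue labels for a single page."""
    parser = OnPageParser()
    parser.feed(html)
    issues = []
    if not parser.title.strip():
        issues.append("missing title tag")
    if not parser.meta.get("description", "").strip():
        issues.append("missing meta description")
    if "noindex" in parser.meta.get("robots", "").lower():
        issues.append("robots meta tag set to noindex")
    if parser.h1_count != 1:
        issues.append(f"expected one h1 headline, found {parser.h1_count}")
    # Links to another host count as outbound; relative links count as internal.
    host = urlparse(url).netloc
    outbound = [h for h in parser.hrefs if urlparse(h).netloc not in ("", host)]
    if len(outbound) > MAX_OUTBOUND_LINKS:
        issues.append(f"too many outbound links ({len(outbound)})")
    if len(url) > MAX_URL_LENGTH:
        issues.append(f"URL too long ({len(url)} characters)")
    return issues
```

Dead links and other server errors would additionally need the HTTP status codes collected during the crawl, which this parser-only sketch leaves out.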

We check for all of these errors once a week and list them in the overview, prioritized by optimization potential (right-hand column in the table) and with their history, so you can see what has changed since the previous week.
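The weekly overview could be approximated like this. The sketch assumes each check already has an issue count for this week and last week; the per-check `weight` and the resulting "potential" (weight × count) are hypothetical stand-ins, since the article does not say how Searchmetrics calculates optimization potential.

```python
from dataclasses import dataclass


@dataclass
class CheckResult:
    name: str
    count_this_week: int
    count_last_week: int
    weight: float  # hypothetical importance of this check, from 0 to 1


def weekly_overview(results):
    """Returns (name, count, change since last week, potential) rows,
    highest hypothetical optimization potential first."""
    rows = []
    for r in results:
        potential = r.weight * r.count_this_week
        change = r.count_this_week - r.count_last_week
        rows.append((r.name, r.count_this_week, change, potential))
    rows.sort(key=lambda row: row[3], reverse=True)
    return rows
```

Weighting by importance rather than sorting by raw counts keeps a few hundred slightly long URLs from crowding out a smaller number of dead links.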

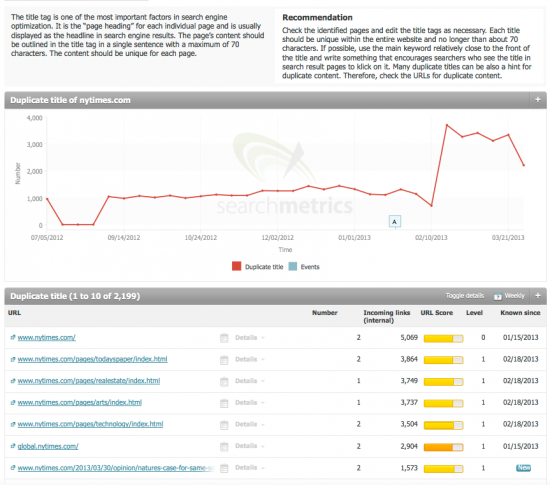

The detail page for each error or message, shown above, contains not only an explanation and the history but also the relevant detail data.

If we find duplicated description tags, for example, the space at the top explains why that could be a problem and how to deal with it. Incidentally, duplicated title tags can indicate that content has been published more than once, which means the site risks having duplicate content.

For the New York Times, the history shows that the number of duplicated description tags rose sharply in early February. Finding the cause would be vital so that the problem can be fixed at its root.

Below that is a list of all duplicated title tags with detailed information. It shows the error on the home page, which must be resolved: this page has 5,069 inbound (internal) links and is extremely relevant. A page's level in the site hierarchy is naturally also a signal of how urgently the error should be fixed.
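Finding duplicated title or description tags essentially means grouping pages by their normalized text. In this sketch (hypothetical function `find_duplicates`, with input assumed to be `(url, text, inbound_internal_links)` tuples from a crawl), each group is ordered by its most heavily linked page, so a duplicate involving a page like the home page with thousands of internal links lands at the top of the list.

```python
from collections import defaultdict


def find_duplicates(pages):
    """pages: iterable of (url, text, inbound_internal_links), where text is a
    title or meta description. Returns groups of URLs sharing the same text,
    each group sorted by inbound links, most-linked group first."""
    by_text = defaultdict(list)
    for url, text, inbound in pages:
        key = " ".join(text.lower().split())  # ignore case and extra whitespace
        if key:  # empty titles/descriptions belong to a separate "missing" check
            by_text[key].append((url, inbound))
    groups = [members for members in by_text.values() if len(members) > 1]
    for members in groups:
        members.sort(key=lambda m: m[1], reverse=True)
    # The group whose best-linked page has the most internal links comes first.
    groups.sort(key=lambda members: members[0][1], reverse=True)
    return groups
```

Page level could be added as a secondary sort key in the same way, for example by counting the path segments of each URL.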

Hint: Continuous error removal

It is probably impossible to keep a website completely free of errors. Keeping their number low, however, matters not only for SEO but also for the site's visitors. I would therefore recommend reserving some developer capacity every week to work through these messages.

Keyword Optimization

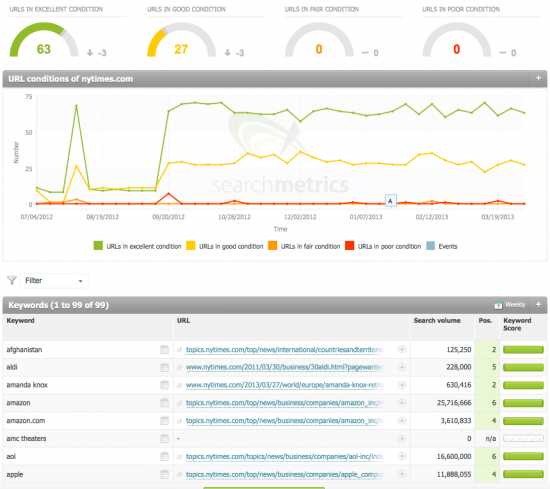

Keyword optimization takes a different perspective. Here our crawler checks whether a URL is really optimally matched to the keyword for which it is found. To do this, we monitor all URLs that already rank in Google for one of the keywords stored in the project, and then evaluate how well each URL matches its keyword.

The New York Times' balance looks pretty good here: 63 URLs are in top condition, where (almost) nothing can be improved, and 27 URLs are in good condition, that is, with some potential for improvement. Below this are, again, the history and a table of all saved keywords, in which search volume and ranking position are used to clearly separate “important” from “not important” keywords.

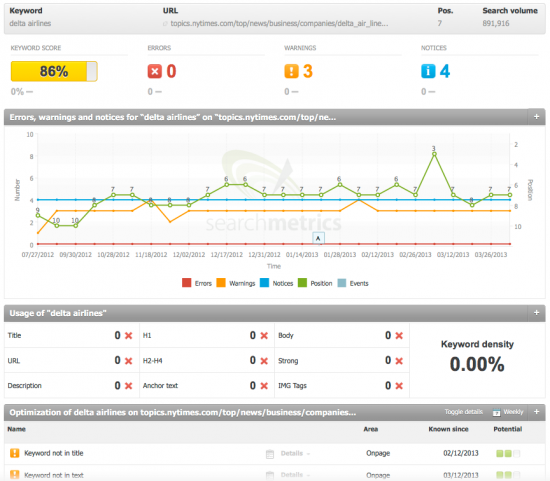

The detail view of such an analysis lets you find the relevant information quickly.

The image above shows the keyword, the URL, the current position and the search volume. Below these, all data on errors and warnings is aggregated into a keyword score. In this example, incidentally, the solution is quite simple: the URL is not optimized because of a difference in spelling. Google users search for the airline as “Delta Airlines,” while the company calls itself “Delta Air Lines.”

This case is an exception, however. Usually keyword optimization reveals large potential that can be realized simply by adding the keyword to the headline or the main text, wherever it is missing. Another factor is whether the keyword is used in internal links pointing to this page, which the Searchmetrics crawler also checks. Does the internal linking of this URL help its ranking? On a large site, that cannot be judged by eye without a crawler.
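A simplified version of this keyword targeting check might look like the following. The `Page` fields, the `WEIGHTS` and the 0-to-1 scale are assumptions made for illustration; the article does not disclose how the Searchmetrics keyword score is calculated. The check only asks whether the keyword appears in the title, the headline, the main text and the anchor texts of internal links pointing to the page.

```python
from dataclasses import dataclass, field


@dataclass
class Page:
    url: str
    title: str
    headline: str
    body: str
    inbound_anchor_texts: list[str] = field(default_factory=list)


# Hypothetical weights for illustration; not Searchmetrics' scoring model.
WEIGHTS = {"title": 0.3, "headline": 0.3, "body": 0.2, "internal_anchors": 0.2}


def contains(text: str, keyword: str) -> bool:
    """Plain case-insensitive substring match."""
    return keyword.lower() in text.lower()


def keyword_score(page: Page, keyword: str) -> tuple[float, list[str]]:
    """Returns a 0..1 score and the places where the keyword is missing."""
    checks = {
        "title": contains(page.title, keyword),
        "headline": contains(page.headline, keyword),
        "body": contains(page.body, keyword),
        "internal_anchors": any(contains(a, keyword) for a in page.inbound_anchor_texts),
    }
    score = sum(WEIGHTS[name] for name, ok in checks.items() if ok)
    missing = [name for name, ok in checks.items() if not ok]
    return round(score, 2), missing
```

Because the sketch uses a plain substring match, a page written entirely as “Delta Air Lines” scores 0 for the keyword “delta airlines”, the kind of spelling mismatch described in the Delta example above.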

By the way, if the desired landing page is not (yet) the one ranking highest, you can easily specify it for the crawler. The keyword optimization process will then show what still needs to be done for this URL to rank for the keyword.

Hint: Rising keywords and declining keywords

Here, too, the detail work is never finished. I would therefore recommend reviewing once a week what could still improve keyword targeting, especially for rising keywords (i.e. search terms in positions 10 to 20) and for terms that have dropped over the course of the week.
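The weekly selection recommended above can be expressed as a simple filter. The sketch assumes you have each keyword's position from last week and this week (`None` meaning it did not rank); the `Ranking` type and `weekly_review` function are hypothetical. "Rising" follows the article's definition of positions 10 to 20, and "declined" means the position got worse or the keyword dropped out entirely.

```python
from typing import NamedTuple, Optional


class Ranking(NamedTuple):
    keyword: str
    position_last_week: Optional[int]  # None = not ranking last week
    position_this_week: Optional[int]  # None = not ranking this week


def weekly_review(rankings):
    """Returns (rising, declined) keyword lists for the weekly review."""
    rising = [
        r.keyword for r in rankings
        if r.position_this_week is not None and 10 <= r.position_this_week <= 20
    ]
    declined = [
        r.keyword for r in rankings
        if r.position_last_week is not None
        and (r.position_this_week is None or r.position_this_week > r.position_last_week)
    ]
    return rising, declined
```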

The on-page trick: Detail work, well weighted

On-page SEO is detail work, which is exactly why it is important not to overcomplicate it or get lost in unimportant details. We therefore separate important tasks from unimportant ones using indices, search volumes, relevance data and other sorting aids. On a large website, this is the only way to make sure not only that things are done right, but that the right things are done.

Next week we will look at return on investment: paying attention to the conversion.

Series: How top companies handle big data in SEO

The complete Big Data series is available as an eBook on our website. Download it now!

- Promoting productivity: managing international teams and agencies. Large quantities of data require finely grained access rights, both for data protection and for quality reasons: if everyone can do whatever they want, you end up not really knowing what is in the big data pot. The Suite's rights management makes these tasks simple and user-friendly.

- Quick overview: managing different campaigns in a structured way. Large companies always pursue multiple goals at once. Features of the Searchmetrics Suite such as tagging and multi-tagging let you pursue each goal individually, and the Suite also lets you customize and automate every report and every chart.

- Observing competition: learning from your competitors. Competition is sometimes the biggest surprise in online marketing. Our offline competitors may be only marginal competitors online, whereas our offline partners may turn out to be our toughest online competitors. Our numerous data pools provide the right environment for this analysis.

- Pick the cherries: tapping into hidden potential. It is not always worthwhile to work on the keywords with the largest number of searches. Competition, universal search and existing rankings also play an important part.

- Improving performance: technical optimization. Sometimes even the best SEO gets muddled when dealing with a large website. We crawl every page and report errors as well as keyword optimization potential.

- Return on investment: paying attention to the conversion. Ultimately, it's about the money. Is a PPC campaign worth it, or would the budget be better allocated to SEO? Which is more worthwhile? We supply figures to help you make this decision.

- Optimizing processes: saving time and money. One employee on the team needs a daily report, another one a monthly summary. Our reports are highly flexible, relevant and can be generated with just a few clicks.

So you see: There’s a lot to be done. Big data isn’t just marketing hype or a simple glance into a crystal ball filled with data. Big data is a necessary and effective way of working that is simply part of enterprise SEO and has to be learned. We help you with it. Stay tuned!