It’s the biggest change to Google’s algorithm in five years, affecting one in ten search queries. With the Google BERT Update, Google aims to improve the interpretation of complex long-tail search queries and display more relevant search results. By using Natural Language Processing, Google has greatly improved its ability to understand the semantic context of search terms.


Searchmetrics’ two cents on the Google BERT Update

“Bert is a logical development for Google, following in the footsteps of Panda, Hummingbird and RankBrain. However, this time we’re not looking at a change in the way data is indexed or ranked. Instead, Google is trying to identify the context of a search query and provide results accordingly. This is an exciting addition to what context-free models like Word2Vec and GloVe are able to offer. For Voice Search and Conversational Search, I would expect to see significant leaps forward in the quality of results in the near future.” – Malte Landwehr, VP Product, Searchmetrics


Where has BERT been rolled out?

While BERT was initially used only for organic search results on Google.com, since December 2019 it has been rolled out to more than 70 languages worldwide. For Featured Snippets, which are displayed above the organic search results as "position 0" in the form of text, a table or a list, BERT was already being used in all 25 languages in which Google displays Featured Snippets.

BERT is being rolled out for the calculation of organic search results in: Afrikaans, Albanian, Amharic, Arabic, Armenian, Azeri, Basque, Belarusian, Bulgarian, Catalan, Chinese (Simplified & Taiwan), Croatian, Czech, Danish, Dutch, English, Estonian, Farsi, Finnish, French, Galician, Georgian, German, Greek, Gujarati, Hebrew, Hindi, Hungarian, Icelandic, Indonesian, Italian, Japanese, Javanese, Kannada, Kazakh, Khmer, Korean, Kurdish, Kyrgyz, Lao, Latvian, Lithuanian, Macedonian, Malay (Brunei Darussalam & Malaysia), Malayalam, Maltese, Marathi, Mongolian, Nepali, Norwegian, Polish, Portuguese, Punjabi, Romanian, Russian, Serbian, Sinhalese, Slovak, Slovenian, Spanish, Swahili, Swedish, Tagalog, Tajik, Tamil, Telugu, Thai, Turkish, Ukrainian, Urdu, Uzbek & Vietnamese.

In this tweet, Google announced the global rollout of BERT:

BERT, our new way for Google Search to better understand language, is now rolling out to over 70 languages worldwide. It initially launched in Oct. for US English. You can read more about BERT below & a full list of languages is in this thread…. https://t.co/NuKVdg6HYM

— Google SearchLiaison (@searchliaison) December 9, 2019

Meanwhile, Webmaster Trends Analyst John Mueller addressed BERT in one of his Google Webmaster Hangouts after a user reported a 40% decline in traffic and suspected BERT was the cause. Mueller explained that BERT was not responsible for ranking and traffic drops of this kind; instead, they were caused by one of the regular updates or a core update. From 30:46 in the video, Mueller explains in detail how algorithm development at Google works and which criteria are used to decide on changes:

What does BERT mean?

The abbreviation BERT stands for Bidirectional Encoder Representations from Transformers and refers to a language model based on neural networks. With the help of Natural Language Processing (NLP), machine systems attempt to interpret the complexity of human language. You can find detailed documentation of BERT on Google’s AI blog.

Simply put, Google uses BERT to better understand the context of a search query and to interpret the meaning of individual words more accurately. This breakthrough is built on mathematical models called Transformers, which analyze each word in relation to all the other words in a sentence (or, in the case of Google Search, in the search query) instead of looking at the meaning of words in isolation. This is particularly useful for interpreting prepositions and the position of individual words within a search query.
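To make "analyzing a word in relation to all the other words" more concrete, here is a minimal sketch of scaled dot-product self-attention, the core operation inside Transformers, in plain Python. It is a toy, not Google's implementation: the three-dimensional word vectors in `EMB` are invented for illustration, filler words are left out to keep it small, and real BERT models add learned query/key/value projections, many attention heads and stacked layers. What it shows is that the output vector for "curb" changes depending on whether "no" appears in the query, whereas a context-free lookup (the kind of thing Word2Vec or GloVe provide) would return the same vector either way.

```python
import math

# Hypothetical toy word vectors, invented purely for illustration.
EMB = {
    "parking": [1.0, 0.0, 0.2],
    "hill": [0.8, 0.1, 0.0],
    "no": [0.0, 1.0, -1.0],
    "curb": [0.9, 0.0, 0.5],
}


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Simplified scaled dot-product self-attention (no learned projections).

    Each word's output is a weighted average of ALL word vectors in the
    query, weighted by how similar each of them is to that word.
    """
    d = len(vectors[0])
    outputs, weights_per_word = [], []
    for query in vectors:
        scores = [sum(a * b for a, b in zip(query, key)) / math.sqrt(d)
                  for key in vectors]
        weights = softmax(scores)
        mixed = [sum(w * v[i] for w, v in zip(weights, vectors))
                 for i in range(d)]
        outputs.append(mixed)
        weights_per_word.append(weights)
    return outputs, weights_per_word


def contextual_vector(tokens, word):
    """Return the context-dependent vector for `word` within `tokens`."""
    outputs, _ = self_attention([EMB[t] for t in tokens])
    return outputs[tokens.index(word)]


with_no = contextual_vector(["parking", "hill", "no", "curb"], "curb")
without_no = contextual_vector(["parking", "hill", "curb"], "curb")

print("context-free 'curb':", EMB["curb"])
print("'curb' with 'no':   ", [round(x, 3) for x in with_no])
print("'curb' without 'no':", [round(x, 3) for x in without_no])
```

Because nothing is masked out, each word draws on context both to its left and to its right, which loosely corresponds to the "bidirectional" in BERT's name.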

Why is the BERT Update so important for Google?

According to Google, around 15 percent of all search queries are new, meaning they are being searched for the very first time. Furthermore, the phrasing of search queries is growing closer and closer to real human communication, partly under the influence of technical advances like Voice Search. The analytics service Comscore expects the proportion of voice searches to hit 50 percent within two years. Another factor is the increasing length of search queries: today, 70% of searches can be considered long-tail. People turn to Google with fully formulated questions and expect precise answers in a fraction of a second, and BERT now makes up a significant part of the technology that makes this possible.

For many years now, Google has been working on algorithms, including neural networks, that can correctly respond to new search queries and improve the interpretation of content:

- Hummingbird: In 2013, Hummingbird was incorporated into Google’s algorithm. This update made it possible to better interpret entire search queries, rather than just matching the individual words within a query.

- RankBrain: In 2015, RankBrain became part of Google’s algorithm and was declared to be the third-most important ranking factor. This made it possible to process search terms with multiple meanings, or otherwise complex queries that go beyond the normal long-tail search. With RankBrain, it also became possible to process first-time searches, colloquialisms, dialogues and neologisms.

Which search queries are affected by BERT?

BERT primarily affects long-tail search queries. It improves the interpretation of context for longer queries that are typed (or, in Voice Search, spoken) into the search bar as a question or a string of words.

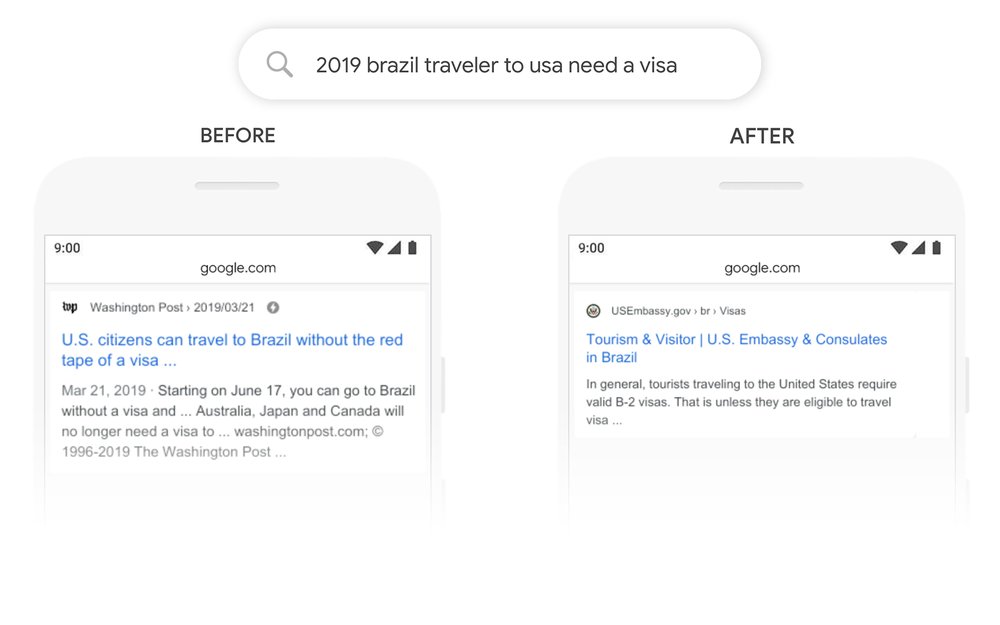

On its blog, Google provided a few examples of search queries that BERT helps it understand better and for which the search engine now provides more relevant results.

In the first example, an organic search for "2019 brazil traveler to usa need a visa", Google says the importance of the word "to" and its relationship to the other words was previously underestimated. However, "to" plays an integral role in the meaning of the query: this is someone from Brazil who wants to travel to the USA, not the other way around. The new BERT model makes it possible for Google to understand this distinction and return results that match the true search intent.
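Why a small word like "to" matters can be shown with a deliberately naive comparison. The sketch below assumes a bag-of-words representation that only counts which words occur; this is an illustrative simplification, not a description of how Google processed queries before BERT. Google's example query "2019 brazil traveler to usa need a visa" and a hypothetical version with the countries swapped contain exactly the same words, so the bag-of-words view cannot tell them apart, while even a crude order-aware view (adjacent word pairs) can.

```python
from collections import Counter

# Google's example query and a hypothetical reversed-trip version.
original = "2019 brazil traveler to usa need a visa"
reversed_trip = "2019 usa traveler to brazil need a visa"


def bag_of_words(query):
    """Count words, ignoring their order entirely."""
    return Counter(query.split())


def word_pairs(query):
    """Adjacent word pairs (bigrams), which keep some word-order information."""
    tokens = query.split()
    return list(zip(tokens, tokens[1:]))


same_bag = bag_of_words(original) == bag_of_words(reversed_trip)
same_pairs = word_pairs(original) == word_pairs(reversed_trip)

print("identical as bag of words:", same_bag)
print("identical as word pairs:  ", same_pairs)
print("'to' is followed by:", [b for a, b in word_pairs(original) if a == "to"])
```

Word pairs are only a stand-in here: they capture local order but not the longer-range relationships that a Transformer model like BERT is designed to pick up.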

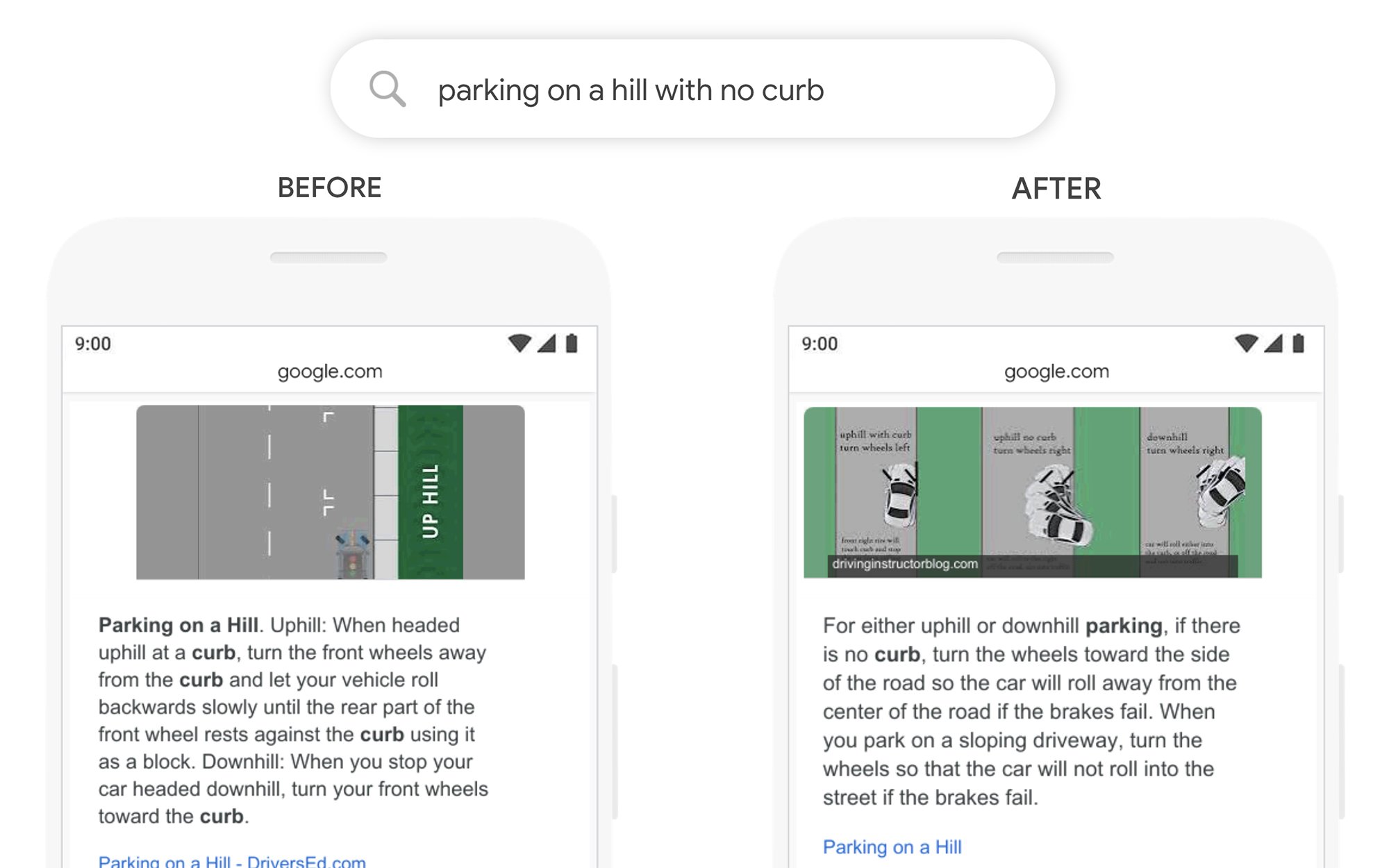

Example 2: “Parking on a hill with no curb”

In this example from Google’s blog post, which deals with choosing the most relevant Featured Snippet, the focus used to be placed too heavily on the word "curb" while the importance of the word "no" was ignored. As a result, the Featured Snippet displayed was of little use, because it actually answered the opposite of the question the searcher was asking.

What can SEOs and webmasters do?

There is no simple answer to the question of how to react to BERT. There are no easy tactics that will suddenly make your website rank better or recover lost rankings. Instead, it is important to bear in mind that you need to write your content and build your websites not just for algorithms, but for people: for the potential users and customers who will be visiting and interacting with your website.