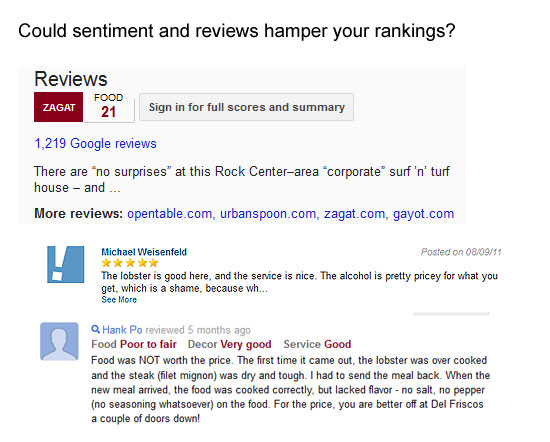

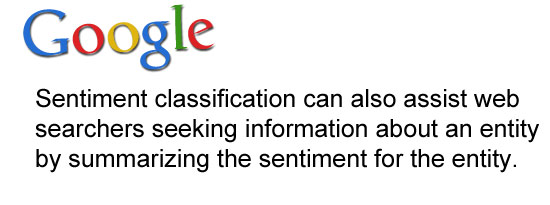

Last week we looked at how Google might look at and use the social graph in search and advertising. People are indeed an ever-growing source of signals that can be mined. That’s fairly obvious. Another area where this could connect, of course, is reviews or, more specifically, sentiment.

How might a search engine deal with this though? In fact, it’s always been one of the harder areas for them to deal with over the years, but they keep on trying. The last time I really touched on this was back in 2011 with: How does Google handle reviews and sentiment?

This week though, there was an interesting patent award (to Google) that again touched on this area, so that’s what we’re going to get into today. The patent in question is:

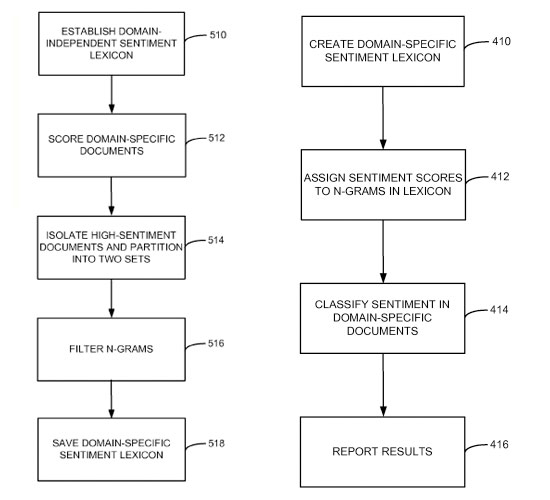

Domain-specific sentiment classification; Filed: June 17, 2011 – Awarded: January 15, 2013

What seems to be the problem?

For starters, let’s address the most troubling aspect: terminology crossover. The patent explains it well:

“(…) it does not account for the sentiment expressed by domain-specific words. For example the word “small” usually indicates positive sentiment when describing a portable electronic device, but can indicate negative sentiment when used to describe the size of a portion served by restaurant. Thus, words that are positive in one domain can be negative in another. Moreover, words which are relevant in one domain may not be relevant in another domain. For example, “battery life” may be a key concept in the domain of portable music players but be irrelevant in the domain of restaurants. This lack of equivalence in different domains makes it difficult to perform sentiment classification across multiple domains.”

This truly highlights one of the core issues. What is a positive sentiment in one instance may not be in another. To deal with this, they set about creating a domain-specific sentiment lexicon that can be used on documents of a specific nature.
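
To make the “small” problem concrete, here’s a rough sketch in Python. None of this comes from the patent itself: the word scores, the domain names and the `classify` helper are all my own made-up assumptions, purely to show how a domain-specific lexicon could override a general one.

```python
from typing import Dict

# Hypothetical, hand-picked scores for illustration only (not from the patent).
GENERAL_LEXICON: Dict[str, float] = {"great": 1.0, "terrible": -1.0}

# Hypothetical per-domain overrides: the same word, opposite polarity.
DOMAIN_LEXICONS: Dict[str, Dict[str, float]] = {
    "portable_electronics": {"small": 0.5},
    "restaurants": {"small": -0.5},
}


def score_text(text: str, domain: str) -> float:
    """Sum word scores, preferring the domain lexicon over the general one."""
    domain_lexicon = DOMAIN_LEXICONS.get(domain, {})
    total = 0.0
    for raw_word in text.lower().split():
        word = raw_word.strip(".,!?;:\"'")
        if word in domain_lexicon:
            total += domain_lexicon[word]
        else:
            total += GENERAL_LEXICON.get(word, 0.0)
    return total


def classify(text: str, domain: str) -> str:
    """Map the summed score to positive, negative or neutral."""
    score = score_text(text, domain)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(classify("The player is small.", "portable_electronics"))
print(classify("The portions were small.", "restaurants"))
```

Same word, two different verdicts, depending on which domain lexicon gets consulted. A real system would obviously need far more than a two-word dictionary, but that’s the gist of it.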

Another major issue is, of course, vetting the reviewers. But I covered that last time, so we’ll stay on this track for today.

Now, before you get too far ahead, they describe a ‘domain’ as a particular sphere of activity, concern or function (such as restaurants, electronic devices, international business, and movies). It does not refer to Internet domain names.

The nuts and bolts

They define tracking sentiment for various entities, including:

- companies,

- products,

- and people.

And the sentiment as being:

- positive,

- negative,

- or neutral (i.e., the sentiment is unable to be determined).

And the documents housing the sentiment as:

- web pages and/or portions of web pages

- the text of books

- newspapers

- magazines

- emails

- newsgroup postings

- and/or other electronic messages

Which in itself is an interesting collection. The ‘emails’ part would be of particular interest to the tinfoil crowd out there. I personally just think they’re covering their backsides with the list. Still, it’s worth highlighting, if only to get your head space past mere web pages.

“For example, the documents in the domain-specific corpus can include documents related to restaurants, such as portions of web pages retrieved from web sites specializing in discussions about restaurants. Likewise, the domain-specific documents in the corpus can include web pages retrieved from web sites that include reviews and/or discussion related to portable electronic devices, such as mobile telephones and music players. In contrast, the documents in the domain-independent corpus can include documents associated with a variety of different domains, so that no single domain predominates. In addition, the documents in the domain-independent corpus can be drawn from sources unrelated to any particular source, such as general interest magazines or other periodicals.”

So, sentiment isn’t always going to be about mere review sites. Sure, they’re considered, but they’re not the only place to look. And of course, we can also go back to last week’s article and consider how the social graph might play into this as well.

The Approach

They looked at creating a domain-specific classifier (again, a domain isn’t a website, but a conceptual space). Essentially there would be sentiment terms for, say, a website about ‘search engine optimization’. Domain doesn’t mean ‘Searchmetrics.com’. Ya follow?

Thus a website such as ours might have more than one domain classification. This makes obvious sense for documents that cover multiple topics (think of a hub page of a newspaper website). Certainly this would be a huge processing element, so as with many things in information retrieval, they discuss using training documents in the process.

Where does one find the lexicons? Hard to say, but in the patent they mention:

“In one embodiment, the domain-independent sentiment lexicon is based on a lexical database, such as the WordNet electronic lexical database available from Princeton University of Princeton, N.J. The lexical database describes mappings between related words. That is, the database describes synonym, antonym, and other types of relationships among the words.”

And then…

“(…) the administrator selects initial terms for the domain-independent sentiment lexicon by reviewing the lexical database and manually selecting and scoring words expressing high sentiment. The administrator initially selects about 360 such words in one embodiment although the number of words can vary in other embodiments. This initial set of words is expanded through an automated process to include synonyms and antonyms referenced in the lexical database. The expanded set of words constitutes the domain-independent sentiment lexicon.”

Which is interesting in that it is part manual and part automated. I wonder if the Google reviewers get in on the action with this kind of stuff?
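
For the curious, the seed-and-expand idea might look something like the sketch below. Fair warning, it rests on a pile of assumptions: the tiny `SYNONYMS` and `ANTONYMS` tables are a hypothetical stand-in for a lexical database like WordNet, and the rule that synonyms inherit a seed’s score while antonyms get the flipped score is my guess at a sensible default, not something the patent spells out.

```python
from collections import deque
from typing import Dict, List

# Hypothetical stand-in for a lexical database (a real one would be far larger).
SYNONYMS: Dict[str, List[str]] = {
    "good": ["fine", "great"],
    "great": ["superb"],
    "bad": ["poor"],
}
ANTONYMS: Dict[str, List[str]] = {"good": ["bad"]}


def expand_lexicon(seeds: Dict[str, float]) -> Dict[str, float]:
    """Grow a manually scored seed set by following synonym/antonym links.

    Assumption: synonyms inherit the score, antonyms get the negated score.
    Words that already have a score are never overwritten.
    """
    lexicon = dict(seeds)
    queue = deque(seeds)
    while queue:
        word = queue.popleft()
        score = lexicon[word]
        related = [(syn, score) for syn in SYNONYMS.get(word, [])]
        related += [(ant, -score) for ant in ANTONYMS.get(word, [])]
        for other, other_score in related:
            if other not in lexicon:
                lexicon[other] = other_score
                queue.append(other)
    return lexicon


# One manually scored seed word fans out into six scored words.
print(expand_lexicon({"good": 1.0}))
```

The manual part is picking and scoring the seeds; the automated part is the loop that walks the word relationships. Scale that up from one seed to the 360 or so mentioned in the patent and you can see how a decent-sized lexicon falls out without much hand labour.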

They even gave some examples of sites used in the training element, such as:

- popular product review web sites

- Amazon

- CitySearch

- Cnet

Again, just examples… this was done back in 2011.

“These sites include textual product reviews that are manually labeled by the review submitters with corresponding numeric or alphabetic scores (e.g., 4 out of 5 stars or a grade of “B-”).”
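
Those reviewer-supplied scores are what make such reviews handy as training documents: the label comes for free. Here’s a minimal sketch of how stars and letter grades might be boiled down to sentiment labels. The cut-off values, the grade-point table and the function names are all hypothetical choices of mine; the patent excerpt above only says the scores exist.

```python
from typing import Dict

# Hypothetical grade points on a 0-4 scale.
LETTER_POINTS: Dict[str, float] = {"A": 4.0, "B": 3.0, "C": 2.0, "D": 1.0, "F": 0.0}


def normalize_stars(stars: float, max_stars: float = 5.0) -> float:
    """Scale a star rating to the 0..1 range."""
    return stars / max_stars


def normalize_grade(grade: str) -> float:
    """Scale a letter grade such as 'B-' to the 0..1 range."""
    points = LETTER_POINTS[grade[0].upper()]
    if grade.endswith("+"):
        points += 0.3
    elif grade.endswith("-"):
        points -= 0.3
    # Clamp so that 'A+' and 'F-' stay inside 0..1.
    return max(0.0, min(points / 4.0, 1.0))


def label(normalized: float, low: float = 0.4, high: float = 0.6) -> str:
    """Assumed thresholds: high scores positive, low negative, middle neutral."""
    if normalized >= high:
        return "positive"
    if normalized <= low:
        return "negative"
    return "neutral"


print(label(normalize_stars(4)))      # 4 out of 5 stars
print(label(normalize_grade("B-")))   # a grade of B-
```

With these made-up thresholds, both of the patent’s examples (4 out of 5 stars and a “B-”) land on the positive side, while middling scores end up neutral.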

Why does it matter?

Again, I would start to look back at our last post on the social graph. Sure, this kind of scoring method would be important to be mindful of in spaces such as ecommerce, local and brand/authority building, but if they manage to align it with other algorithmic elements such as the social graph, it could play a large role in your search visibility on a personalized scale as well.

With so many search marketers wary of who links to them of late, you might also want to be aware of who’s talking about you and in what context. I’d venture to say that it gives a bit more weight to buzz monitoring, beyond just finding targets for your next link building campaign.

And of course all of this should hopefully start to drag you back from the abyss of link graph myopia. There’s so much more to what’s going on at Google than just links. Embrace social graphs, entity associations, sentiment, knowledge graphs and their kind. Then maybe (your) SEO won’t die, it will evolve.

More Reading:

- How does Google handle reviews and sentiment?

- Large-Scale Sentiment Analysis for News and Blogs (PDF)

- Monitoring algorithms for negative feedback systems

- Google’s Review Search Option and Sentiment Analysis

- Google’s Sentiment Phrase Snippets for Google Places