For most people, a panda is just an animal; for SEO folks, it’s a diagnosis. In this post, I will briefly talk about how Panda works, but the nitty-gritty is actually about how to get hit by a Panda algorithm penalty. You may ask, “Why would you want that, Kevin?” Well, my lad, when you know what you should NOT do, you get a better picture of what you actually SHOULD do. I also strongly believe that by doing everything you can to avoid being hit by Panda, you set your website and company up for sustainable growth and SEO success. So learn how to turn these five factors around and you’ll have a recipe for success when it comes to preventing a Panda penalty.

Picture Source: http://yourbrandlive.com/assets//images/blog/great_success_brandlive.png

Let’s take a brief look at how it works: Panda is a filter that is supposed to prevent “low quality” sites from ranking. The logic works as follows: each indexed page or group of URLs (a whole site or a single folder, which is why Panda can hit just one directory of your site instead of the entire site) receives a relevance score for a query. Navigational queries are an exception: since their relevance is clear, Google does not modify the search results. Generally, search results are filtered by relevance and then displayed to the user in the SERPs. Before the user sees them, however, they’re sorted by Google’s criteria (e.g. the famous ranking factors). It’s important to understand that quality is measured continuously, which in turn affects the search results. Think of Panda as a self-assessing system. We’ll talk about the five determining factors that come into play during this self-assessment of relevance and quality in a minute.

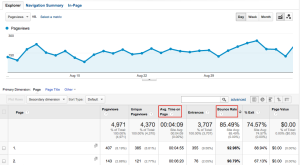

It’s also important to know that Panda doesn’t only punish; it also rewards. Take a look at this screenshot to understand what I mean:

Notice how the website’s visibility dropped from the end of November 2012 until May 2014? On November 21, 2012, the 22nd Panda refresh took place, and its impact is evident in the site’s decrease in visibility. However, on May 19, 2014, Panda 4 was rolled out. The displayed site was able to recover because it avoided the five factors we’re about to talk about.

On the other hand, if you ignore these five factors, this might happen:

But of course you wouldn’t want to do that ;-).

From the video “How does Google use human raters in web search?” we know that Google Quality Raters play an important role in determining the thresholds Panda uses. The newest insights from a recently leaked version of the Google Quality Rater Guidelines also line up perfectly with the three overarching factors that define high-quality websites: expertise, authoritativeness and trustworthiness. This is quite an abstract model, so I want to provide a more tangible approach in this article.

Introducing the five wonderful ways to get hit by Panda:

1. Bad Content

The first thing to do, if you want to get hit by Panda, is to have bad content. I have separated this into five categories:

- Thin:

Imagine you have a category page with only a few lines of meaningless text and hundreds of links to products. This is what we call thin content. Search engines need “food”, aka content, to determine the relevance of a page for a query. All they can read is text: little text, little food. If you barely provide any information that’s accessible to a search engine, how is it supposed to understand what the page is about? The same goes for a user on your site.

- Spun / automated:

Very often, bigger sites / brands have large numbers of pages that need to be filled with content. One easy way is to automate that content, e.g. by writing a boilerplate text that’s the same on each page except for a few variables. We call this spun / automated content. Search engines don’t like it, and neither do users. Of course you cannot always write a unique text for each and every page, but it is vital that you individualize content. You should aim to provide users with as much tailored content as possible.

- Aggregated:

Another popular way to fill a large number of pages with content is to aggregate content from other sources. As we wrote in our Panda 4 analysis, aggregator sites of news, coupons, software and price comparisons were heavily punished by the algorithm. While it makes sense to partially display content from other sources, it should only be to enrich your unique content, not replace it.

- Duplicate:

When content on many sites or pages is too similar, we’re looking at duplicate content. This has been a ranking killer for years, and even though we have weapons like canonical tags and the meta robots noindex tag to fight it, it still appears. While it’s difficult to rank at all with a lot of duplicate content, Panda will punish sites that have it at a large scale.

- Irrelevant:

When users do not find an answer to their question on a page, they leave. A page might seem relevant for a query but fall short of what its title and content promise. Imagine a page about a spaghetti recipe that only covers how good spaghetti tastes, where it came from and what it looks like. It contains the keyword “Spaghetti recipe” in the title, the keyword comes up often in the content, and even semantically related topics are covered, but the page doesn’t contain the actual recipe. In this case, we’re speaking about irrelevant content, at least for the query “Spaghetti recipe”. Here, Google would also be able to determine relevance by analyzing user signals for this page, which brings me to the second way to get hit by Panda.

I would also categorize old content as irrelevant. Imagine a text about a topic that needs to be continuously up to date, such as “iPhone”. Writing about the first and the sixth one is like wearing two different pairs of shoes at the same time.
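To get a rough feel for the duplicate and spun / automated content described above on your own site, one common technique (not necessarily what Google uses) is to split each page’s text into overlapping word sequences (“shingles”) and compare pages with Jaccard similarity. This is a minimal sketch, assuming you already have each page’s main text as a string; the function names, page paths, shingle size of 3 and thresholds are all hypothetical choices for illustration.

```python
from itertools import combinations


def shingles(text: str, k: int = 3) -> set:
    """Return the set of k-word shingles (overlapping word sequences) in text."""
    words = text.lower().split()
    if len(words) < k:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Jaccard similarity: shared shingles divided by all distinct shingles."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def near_duplicates(pages: dict, threshold: float = 0.8, k: int = 3) -> list:
    """Return (url_a, url_b, score) for every page pair whose similarity >= threshold."""
    sigs = {url: shingles(text, k) for url, text in pages.items()}
    results = []
    for (url_a, sig_a), (url_b, sig_b) in combinations(sigs.items(), 2):
        score = jaccard(sig_a, sig_b)
        if score >= threshold:
            results.append((url_a, url_b, round(score, 2)))
    return results


# Hypothetical pages: two boilerplate texts differing in one variable, plus one unique page.
pages = {
    "/shoes/red": "buy red shoes online at great prices with free shipping on every order",
    "/shoes/blue": "buy blue shoes online at great prices with free shipping on every order",
    "/about": "we are a family business founded in berlin selling handmade leather goods",
}

flagged = near_duplicates(pages, threshold=0.6)
print(flagged)  # [('/shoes/red', '/shoes/blue', 0.69)]
```

Short texts score lower because one swapped word breaks several shingles at once, which is why this toy example uses a threshold of 0.6; on real pages you would tune the threshold against pairs you already know to be duplicates.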

Tip: A good way to identify “bad content” is to compare how many pages of your site are indexed with how many pages actually rank.
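The tip above boils down to a simple ratio plus a review list. Here is a minimal sketch, assuming you have two exports: a list of indexed URLs and a list of URLs that rank for at least one keyword (for example from a rank-tracking tool). The function name and the sample URLs are hypothetical.

```python
def ranking_share(indexed_urls, ranking_urls):
    """Return the share of indexed URLs that rank, plus the indexed URLs that don't."""
    indexed = set(indexed_urls)
    if not indexed:
        return 0.0, []
    # Only count ranking URLs that are also indexed.
    ranking = indexed & set(ranking_urls)
    not_ranking = sorted(indexed - ranking)
    return len(ranking) / len(indexed), not_ranking


# Hypothetical exports: indexed URLs and URLs with at least one ranking.
indexed = ["/a", "/b", "/c", "/d"]
ranking = ["/a", "/c", "/x"]
share, candidates = ranking_share(indexed, ranking)
print(f"{share:.0%} of indexed pages rank; review: {candidates}")
```

The pages in the review list are candidates for a closer look, not automatic proof of bad content; a page may simply be new or target a query you don’t track.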

2. Bad User Signals

As you might have read in our recently released ranking factor study, Google pays a lot of attention to user signals. We usually focus on the four most significant ones. So if you want Panda to be mad at you, make sure to have the following:

- Low Click Through Rates

Ranking high does not guarantee that people will click on your result. I’d suggest checking exactly this. A low click-through rate tells us that either your snippet doesn’t promise what the user is looking for or something else in the SERPs has caught their attention.

- High Bounce Rate

Here, the user clicks on your snippet and then quickly bounces back to the SERPs. This is a tricky one, because for some results the time on site is legitimately meant to be short: imagine someone looking for a sports game score. My assumption is that Google is able to distinguish between queries where a high bounce rate signals a problem and those where it is normal.

- Low Time On Site

Users don’t spend much time on your site in general. This is an indicator of low-quality content.

- Low Number of Returning Visitors

Users don’t come back after visiting your site. Good content is consumed again and again (think of “evergreen content”), but most importantly you want users to come back to your site as often as possible after they have discovered it. This is what signals high-quality content in Google’s eyes.

To keep an eye on these four metrics, check them regularly and compare them to your site-wide values. For example, suppose a page has a bounce rate of 65%. Is that too high or okay? To find out, I’d recommend identifying the average bounce rate for your website and then measuring the page’s value against it.
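The comparison above can be automated. This is a minimal sketch, assuming per-page entrances and bounces exported from your analytics tool, and using the analytics definition of bounce rate (single-page sessions divided by entrances) rather than a “back to the SERPs” measurement. The site-wide rate is weighted by entrances instead of averaging page rates, and the 10-percentage-point margin, the `PageStats` type and the sample numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    url: str
    entrances: int  # sessions that started on this page
    bounces: int    # of those, single-page sessions


def rate(bounces: int, entrances: int) -> float:
    """Bounce rate as a fraction; 0.0 for pages without entrances."""
    return bounces / entrances if entrances else 0.0


def pages_above_site_average(pages, margin=0.10):
    """Return the site-wide bounce rate and pages exceeding it by more than margin."""
    # Weighted by entrances, so high-traffic pages count more than a plain average would.
    site_rate = rate(sum(p.bounces for p in pages), sum(p.entrances for p in pages))
    flagged = [
        (p.url, round(rate(p.bounces, p.entrances), 2))
        for p in pages
        if rate(p.bounces, p.entrances) > site_rate + margin
    ]
    return site_rate, flagged


pages = [
    PageStats("/home", entrances=1000, bounces=400),
    PageStats("/blog/post", entrances=500, bounces=325),
    PageStats("/category", entrances=500, bounces=200),
]
site_rate, flagged = pages_above_site_average(pages)
print(f"site: {site_rate:.1%}, flagged: {flagged}")
```

In this made-up data, the page with the 65% bounce rate stands out against a site-wide rate of roughly 46%, while the pages at 40% do not. The same pattern works for click-through rate or time on site by swapping in those columns.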

Tip: Identifying and optimizing / reworking pages with high bounce rates is a crucial task for SEOs. In the third point we’ll talk about how to actually optimize them.

3. Bad Usability

Usability and user experience are each a science of their own, but being an SEO in 2014 means you need to understand and deal with these topics. While you don’t have to run multivariate user tests on colors (even though I recommend it), you should make sure the following factors are poorly handled on your site if you want to get smacked in the face by Panda:

- Design / Layout

Having a really old or simply ugly design is an invitation for users to bounce. While “ugly” is very subjective, I mostly mean a design that looks like the ones spammy sites use, even though your site is not spammy. Continuously improving your site with regular refreshes, or even a relaunch, is a good start for user experience optimization.

- Navigation

Providing a structured navigation is an absolute must-have in order to optimize the so-called “user orientation scent”. Navigation is of course crucial for internal linking and therefore for the flow of PageRank through your site, but users must also be able to find all products and content and use the navigation intuitively.

- Mobile version (redirects)

I don’t have to tell you how important “mobile” is, as you can read about it all over the place. But in terms of user experience, I’d recommend you make sure mobile users are redirected to the mobile version of the page they requested, not to the home page or a 404 error page. Of course, this requires having a mobile version of your site in the first place. As Avinash Kaushik, Google Analytics evangelist, put it so nicely: “If you have a mobile version of your site, you’re now ready for 2008”.

- 404 errors

Every site will have some 404 errors, e.g. when a user enters a URL that doesn’t exist or is no longer available (for the latter, there are nicer solutions). When users run into too many 404 pages, or the 404 pages aren’t optimized, they will leave the site. To avoid this, optimize your 404 error pages so they help the user, for example by offering a way to refine their search or navigate somewhere else on the site.

- Meta-refreshes

A meta-refresh usually sends the user to another page after a couple of seconds. This is not only annoying, but also confusing. Don’t confuse your users.

- Site speed

Site speed is an official ranking factor because it is an essential component of the user experience. Even if you have the best content available, if your site takes minutes to load, you cannot expect users to stay. I could write an entire blog post about optimizing site speed, but for now you just need to know that it is important and should be optimized.

- Ads (primarily targeted by the page layout, or “top heavy”, algorithm, but they also play into Panda)

I don’t recommend you stop using ads to make money, but I want to make you aware that having too many ads will lead to problems. If you place too many ads above the fold or in the text, both the “top heavy” algorithm and Panda will give you very awkward looks. The reason is simple: it’s annoying to users. Have you ever entered a site and suddenly ten layers of pop-ups try to sell you something before you have even had a chance to read the text on the page? It’s like walking through a store and constantly being stopped and asked “do you want to buy this?”, “why don’t you buy that?”, “buy already!”. How can that be a good user experience?

- Flash

Flash and other formats that do not work on all common devices should be avoided. Sorry, Flash.
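Of the usability issues above, meta-refreshes are among the easiest to audit automatically. This is a minimal sketch using Python’s built-in `html.parser`, assuming you already have each page’s HTML as a string; it only finds `<meta http-equiv="refresh">` tags, not JavaScript or server-side redirects, and the class, function and example.com URL are hypothetical.

```python
from html.parser import HTMLParser


class MetaRefreshFinder(HTMLParser):
    """Collect the content attribute of every <meta http-equiv="refresh"> tag."""

    def __init__(self):
        super().__init__()
        self.refreshes = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser already lowercases tag and attribute names; values keep their case.
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("http-equiv", "").strip().lower() == "refresh":
            self.refreshes.append(attributes.get("content", ""))


def find_meta_refreshes(html):
    """Return the content values (e.g. '5; url=...') of all meta refresh tags in html."""
    finder = MetaRefreshFinder()
    finder.feed(html)
    finder.close()
    return finder.refreshes


page = (
    '<html><head><meta charset="utf-8">'
    '<meta http-equiv="Refresh" content="5; url=https://example.com/new-page">'
    "</head><body>Moved!</body></html>"
)
print(find_meta_refreshes(page))  # ['5; url=https://example.com/new-page']
```

Pages that turn up here are candidates for a proper server-side redirect, so users land on the right page immediately instead of waiting and wondering what happened.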

Of course a good user experience will have a direct impact on user signals. In the end, UX also determines whether a site is trustworthy or not, and in today’s Panda world, trust plays a special role.

4. Low trustworthiness

If you want users to stay and actually come back, your site has to be trustworthy. This is even more important if your site is in the financial industry, but it has general advantages for everyone. Proving trustworthiness leads to users recognizing your brand, to the point where they’d click on your snippets just because they see your domain under the title.

Trustworthiness plays an important role on several touch points and starts early in the customer journey:

- Snippet

- First impression (Design & Layout)

- Content

- Conversion

In 2012, Danny Goodwin wrote an article on Search Engine Watch about the Google Quality Rater Guidelines and how page quality had been added to them. The guidelines also contain questions like “Would I trust this site with my credit card?”.

There are a couple of knobs you can turn to improve the trustworthiness of your site:

- Provide contact information and address

- Integrate trusted shop symbols and security seals

- Provide privacy policy

- Display reviews (internal and external)

- Show testimonials

- Avoid bad spelling and grammar

- Serve your site over HTTPS

- Show your client portfolio

- Display press mentions and awards

- Provide an “about us” page with biography / company history

- Avoid highly disruptive / interruptive ads

If you need inspiration from well-trusted sites, look at top players like Amazon or eBay. The more you optimize your site for trustworthiness, the better your chances of seeing conversions. But don’t get too carried away, as you can also optimize too much, which brings us to the last point.

5. Over-optimization

Optimizing “too hard” can definitely lead to a penalty. In most cases this is referred to as a “manual spam penalty”, but it can also play into Panda. I have come across two main “spam” playgrounds in my career: content and internal linking.

Over-optimized text, for example, can easily be detected by simply reading it. Often you can immediately “feel” the difference between a naturally written text and one written for search engines. Of course you have to include the keyword you optimize for in the text, but the days of keyword density are long over. Don’t stuff the text with keywords; just make sure the keyword occurs a couple of times (naturally) in the text, headings, title, description and URL. It is much more important to cover semantically relevant topics and be comprehensive.

For internal linking, over-optimization means too many internal links and the use of hard (exact-match) anchor text. As with backlinks, search engines use thresholds to determine whether an internal link profile looks natural or optimized. An example of an over-optimized internal linking profile would be using the term “buy cheap iPhone 6” on every page to link to your product page. If you don’t go overboard, you should be fine.
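To see whether one hard anchor text dominates the internal links to a page, you can count anchor texts per link target. This is a minimal sketch, assuming you have collected internal links as (anchor text, href) pairs, for example with a site crawler; the function name and sample links are hypothetical, and the code deliberately reports shares only, because the thresholds search engines actually apply are not public.

```python
from collections import Counter


def anchor_text_share(links, target):
    """Return (anchor text, share) for internal links pointing to target, most common first."""
    # Normalize whitespace and case so "iPhone 6" and "iphone 6 " count as the same anchor.
    anchors = [" ".join(text.lower().split()) for text, href in links if href == target]
    if not anchors:
        return []
    counts = Counter(anchors)
    return [(text, count / len(anchors)) for text, count in counts.most_common()]


# Hypothetical internal links as (anchor text, href) pairs collected by a site crawl.
links = (
    [("buy cheap iPhone 6", "/iphone-6")] * 8
    + [("iPhone 6", "/iphone-6"), ("this model", "/iphone-6"), ("Home", "/")]
)
result = anchor_text_share(links, "/iphone-6")
for text, share in result:
    print(f"{share:.0%}  {text}")
```

In this made-up profile, 80% of the links to the product page use “buy cheap iphone 6”, which is exactly the pattern described above; a more natural profile mixes brand, product and descriptive anchors.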

To wrap it up: if you’d like to experience getting hit by Panda and losing approximately 50% of your traffic, and therefore revenue, make sure your site has the five points I mentioned above. If you’d rather improve your success (and avoid Panda), fulfilling Panda’s requirements for high-quality sites is a way to achieve sustainable rankings in the long term. To do this, make sure your site has these five factors in place: quality content, user signals, user experience, trust and optimization.