The ‘Farmer Update’ reminded me of my promise from the beginning of the year to come back to a few of the topics from Agenda 2011. Right now, the Farmer Update is raising questions about how many useful subpages a site has in the index. My tip from two months ago: “Tip #5: Which entry and navigation pages are truly ‘unique’ with their own content and use for the users? All others should be taken out of the index with appropriate devices.”

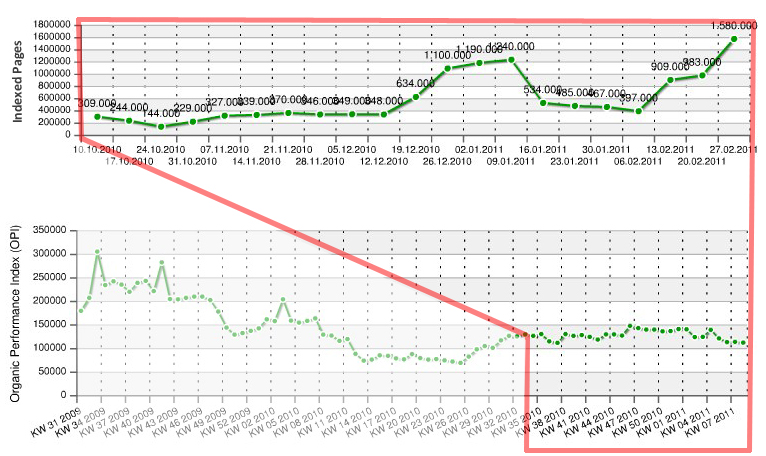

As an illustration, here are the graphs for the site of a client, who of course cannot be named. The upper chart shows how the number of indexed pages has changed since last summer; the lower chart shows the site's visibility in organic search. Watch out: the two curves cover different time periods! I have marked this with the red frame.

And what do we see? Actually the opposite of what many expect: the more pages in the index, the worse the visibility in organic search. Ooooops? How did that happen? Quite simply: whoever distracts the Google robot from its real work (processing sensible, keyword-optimized pages) by burdening it with useless pages that lack unique content has to live with the consequences. This kind of thing wastes the robot's time and may well annoy it. Let's assume, for the sake of simplicity, that Google calculates an average quality value across all subpages and classifies the domain accordingly as a content farm, article directory or quality site. Unlikely? Well, we shall see in the coming weeks.
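To make that simplifying assumption concrete, here is a toy model in Python. Everything in it is hypothetical: the per-page quality scores, the two thresholds and the three labels are invented for illustration, and nothing here describes how Google actually scores pages. It only shows the arithmetic behind the argument: padding a site with thin pages drags the average down even though the good pages stay exactly as good as before.

```python
from statistics import mean
from typing import List

# HYPOTHETICAL thresholds, invented only to illustrate the averaging argument.
# They are not taken from Google or any published source.
QUALITY_SITE_MIN = 0.7
ARTICLE_DIRECTORY_MIN = 0.4


def classify_domain(page_scores: List[float]) -> str:
    """Label a domain by the mean of its (hypothetical) per-page quality scores."""
    avg = mean(page_scores)
    if avg >= QUALITY_SITE_MIN:
        return "quality site"
    if avg >= ARTICLE_DIRECTORY_MIN:
        return "article directory"
    return "content farm"


if __name__ == "__main__":
    good_pages = [0.9] * 100  # unique, useful pages
    thin_pages = [0.1] * 400  # filter lists, session-ID duplicates, etc.
    print(classify_domain(good_pages))                     # quality site
    print(classify_domain(good_pages + thin_pages[:100]))  # article directory
    print(classify_domain(good_pages + thin_pages))        # content farm
```

The 100 good pages never change between the three calls; only the number of thin pages does.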

In any case, it is clear that we should only offer the robot pages that we actually want in the index. This excludes:

- Pages with redundant parameters in the URL (session IDs, referrer IDs etc.)

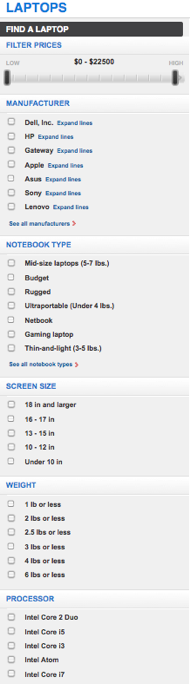

- Extensive product lists generated from various filter criteria

- Paginated category pages

- Product pages with variations (for example, an otherwise identical t-shirt in 15 different colors)

- Pages created from theoretically possible but meaningless permutations: when, for example, the long tail is targeted through combinations of attributes, some combinations simply make no sense.
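The first item on this list, redundant URL parameters, is easy to detect mechanically. The sketch below uses Python's standard `urllib.parse` and assumes hypothetical parameter names (`sid`, `sessionid`, `ref`, `referrer`) for session and referrer IDs; a real shop would substitute whatever its system appends. It drops those parameters, sorts the rest and removes the fragment, so that URLs differing only in tracking noise collapse to one form. This is an illustration of the idea, not a complete URL canonicalizer.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# HYPOTHETICAL names for parameters that never change the page content.
# Replace them with whatever your shop system actually appends.
REDUNDANT_PARAMS = {"sid", "sessionid", "ref", "referrer"}


def normalize_url(url: str) -> str:
    """Return a normalized form of url, for spotting duplicate pages."""
    parts = urlsplit(url)
    # Keep only the parameters that actually select different content.
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in REDUNDANT_PARAMS
    ]
    kept.sort()  # parameter order alone must not create a "new" URL
    # Host names are case-insensitive; the fragment is never sent to the server.
    return urlunsplit(
        (parts.scheme, parts.netloc.lower(), parts.path, urlencode(kept), "")
    )


if __name__ == "__main__":
    a = normalize_url("https://Shop.example.com/shirts?sid=abc123&color=red")
    b = normalize_url("https://shop.example.com/shirts?color=red&ref=newsletter")
    print(a)       # https://shop.example.com/shirts?color=red
    print(a == b)  # True
```

Running a list of crawled URLs through such a function and grouping by the result shows quickly how many "different" pages are really the same one.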

We can use the following methods to deal with these pages:

- Don’t let them appear in the first place (filter permutations beforehand; session IDs never belong in URLs!)

- ‘noindex’ robots meta tag: this tells the robot that the page should not be indexed. This is definitely appropriate for large product lists. But don’t forget: some of these lists are useful, so don’t noindex all of them blindly!

- “noindex, follow” in the robots meta tag: with this tag, we forbid the robot from including the page in the index, while telling it that it should still follow the links on the page. Do this for some paginated pages, and always when these pages are needed to distribute link juice.

- Canonical tag (a `<link rel="canonical">` element in the page head): we use this whenever a different page is actually meant, as with the many t-shirts that differ only in one minor detail, or when the system appends some kind of parameter to a URL. In these cases, I would point the canonical tag at the ‘real’ URL.

- robots.txt: if we want to keep the robot out of entire directories (‘/search/’), we can specify this in the robots.txt. However, this blocks crawling, not indexing: pages that have external links, for example, will remain in the index. But as in the case of the ‘search’ example, this is not always a bad thing…

- ‘Parameter handling’ in Google Webmaster Tools: here you can set the parameters mentioned above to ‘ignore’ or ‘not ignore’. This works quite well, but I don’t recommend relying on it alone and simply hoping that everything will be OK. That would be a mistake.
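The methods above boil down to a decision per page type. The following sketch maps hypothetical page types (`filter_list`, `pagination`, `variant`) to the head tags recommended in this list, and uses Python's standard `urllib.robotparser` to check URLs against a sample robots.txt that blocks `/search/`. The page-type names, the sample robots.txt and the `example.com` URLs are assumptions for illustration; which product lists deserve to stay in the index remains an editorial call.

```python
from html import escape
from typing import List, Optional
from urllib.robotparser import RobotFileParser


def head_tags(page_type: str, canonical_url: Optional[str] = None) -> List[str]:
    """Return the <head> tags for a (hypothetical) page type."""
    if page_type == "filter_list":
        # Large, low-value product list: keep it out of the index.
        return ['<meta name="robots" content="noindex">']
    if page_type == "pagination":
        # Keep out of the index, but let the robot follow the product links.
        return ['<meta name="robots" content="noindex, follow">']
    if page_type == "variant":
        # E.g. the same t-shirt in another color: point to the 'real' URL.
        if canonical_url is None:
            raise ValueError("variant pages need a canonical URL")
        return [f'<link rel="canonical" href="{escape(canonical_url)}">']
    # Unique entry and navigation pages: no directive, indexable by default.
    return []


# Sample robots.txt (an assumption) that blocks the internal search directory.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search/
"""


def may_crawl(url: str, robots_txt: str = ROBOTS_TXT, agent: str = "Googlebot") -> bool:
    """Check whether robots_txt allows agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


if __name__ == "__main__":
    print(head_tags("pagination"))
    print(head_tags("variant", "https://shop.example.com/t-shirt"))
    print(may_crawl("https://shop.example.com/search/?q=shirt"))  # False
    print(may_crawl("https://shop.example.com/shirts/red"))       # True
```

Keep the robots.txt caveat in mind: a URL blocked this way is not crawled, so the robot never sees a noindex tag on it, and URLs with external links can stay in the index anyway.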

So anyone who now believes that none of this concerns them should check again whether they have duplicate or useless pages in the index. Grab a short piece of text from a suspicious page and search for it in combination with the site: operator (e.g. “site:domain.com text snippet”). If one of the result pages shows Google's message that very similar entries have been omitted, then it’s time to consolidate the site.

P.S.: Who’s writing this stuff? My name is Eric Kubitz and I am one of the co-founders of CONTENTmanufaktur GmbH. Anyone trying to reach me can do so via e-mail (ek@contentmanufaktur.net) or on Twitter. ‘Til next time!