“Oh Lucas, you’re such a tease,” Lady Colebrook replied. Helena had a vision of her thrusting out her well-endowed cleavage by way of offering an inducement. “Do you like to see me beg, is that it?”

– Excerpt from To Save a Sinner, by Adele Clee

It’s not very often that romance novels and artificial intelligence get to share a day together in the spotlight. In May, that changed. In an effort to give Google’s artificial intelligence software more input into how people really communicate, the company began feeding it a steady diet of romance novels – nearly 3,000 of them.

Weird? Yes. Odd? Definitely so. But there’s a method to Google’s approach. A paper by Google Brain researchers titled “Generating Sentences from a Continuous Space” offers some valuable insight into Google’s thinking. Consider it a new take on the old proverb: “Give an AI a tweak, you feed it for a day; teach your AI to fish and you feed it for a lifetime.” The researchers threw plenty of chum into the water, hoping to teach the software a thing or two about the nuances of natural-language conversation and about creating its own connections from them.

The Role of Deep Learning

Deep learning is getting a lot of attention in the search and content marketing communities these days, thanks in part to Google’s reveal in 2015 that it uses a deep learning algorithm called RankBrain to help improve search results. A refinement of traditional machine learning, RankBrain and other deep learning systems try to fill in the potholes on the road to artificial intelligence by using patterns learned from a massive database of queries and other information to interpret queries that traditional software can’t make sense of. That could include anything from ambiguous search queries and colloquial terms to voice search and conversational speech. The goal is simple: to process language the way a human talks and thinks, so the software better understands what people mean when they interact with it.

It’s set off a scramble among online marketers to understand just how Google’s deep learning efforts, as well as those from Facebook, Apple, Intel and others, will affect how they create and optimize content. At stake are billions of dollars in online advertising and sales.

Change most certainly is coming. After Google Brain researchers “trained” the AI by feeding it romance novels (among other genres), it was able to make more natural connections and progressions between sentences.

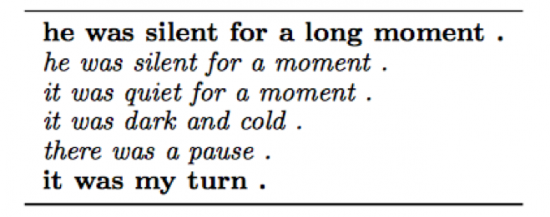

Take this example. Researchers gave the AI two sentences: “He was silent for a long moment” and “It was my turn.” They wanted to see if, after “reading” the romance novels, the AI engine could produce sentences that naturally bridged the progression between the two.

Turns out, the AI didn’t do a half-bad job. The intermediate sentences it produced each differed slightly in meaning, but each one made sense given the sentences before and after it. In other words, it found a natural, sensible progression from the first sentence to the last.
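The paper behind this experiment gets these in-between sentences by encoding each sentence as a point in a continuous “latent” space, drawing a straight line between the two points, and decoding points along that line back into text. Here is a minimal sketch of just that straight-line walk, assuming the encoding and decoding steps happen elsewhere. The function name `interpolate` and the two-dimensional example vectors are hypothetical stand-ins chosen for illustration, not Google’s code; real sentence codes have many more dimensions.

```python
from typing import List

Vector = List[float]


def interpolate(z_start: Vector, z_end: Vector, steps: int) -> List[Vector]:
    """Return `steps` evenly spaced points on the line from z_start to z_end.

    Each point is z(t) = (1 - t) * z_start + t * z_end, with t running
    from 0.0 (the first sentence's code) to 1.0 (the second sentence's code).
    """
    if len(z_start) != len(z_end):
        raise ValueError("latent vectors must have the same dimension")
    if steps < 2:
        raise ValueError("need at least 2 steps to include both endpoints")
    points = []
    for i in range(steps):
        t = i / (steps - 1)  # fraction of the way from start to end
        points.append([(1 - t) * a + t * b for a, b in zip(z_start, z_end)])
    return points


if __name__ == "__main__":
    # Hypothetical 2-D codes standing in for two encoded sentences.
    for z in interpolate([0.0, 2.0], [4.0, 0.0], steps=5):
        # In the real model, each z would be passed to a decoder
        # that turns it back into a sentence.
        print(z)
```

Each returned point would be handed to the decoder. Because nearby points tend to decode to similar sentences, the outputs shift gradually in meaning from one endpoint to the other.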

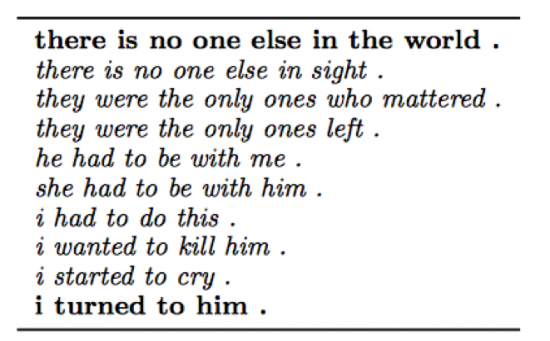

Here’s another example. Starting with the sentence, “There was no one else in the world,” and ending with “I turned to him.” Google’s AI came up with the following:

Sure, it may sound like bad poetry, but it’s clear that the experiment taught Google’s AI engine a thing or two about connecting ideas in a logical sequence.

But to what purpose? Why would Google’s AI need this capability? According to the paper, “The standard recurrent neural network language model (RNNLM) generates sentences one word at a time and does not work from an explicit global sentence representation.” In other words, a standard neural network language model treats a sentence as a sequence of individual words, predicting each word from the ones before it, without an explicit representation of the sentence as a whole.
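To make the contrast concrete, here it is in standard probability notation (our notation, not the article’s). An RNNLM scores a sentence x, made up of words x_1 through x_T, as a chain of next-word predictions, each conditioned only on the words before it (left). The model in the paper, a variational autoencoder, adds a single latent vector z drawn from a simple prior p(z) and conditions every word on that vector as well, so z serves as the explicit global representation of the sentence (right):

$$
p(x) = \prod_{t=1}^{T} p(x_t \mid x_1, \dots, x_{t-1})
\qquad \text{vs.} \qquad
p(x) = \int p(z) \prod_{t=1}^{T} p(x_t \mid z, x_1, \dots, x_{t-1}) \, dz
$$

Because every sentence corresponds to a point z, moving smoothly between two such points is what produces the gradual sentence-to-sentence progressions in the examples above.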

By feeding the engine natural language (such as that found in romance novels), the researchers hope to expand its vocabulary and its grasp of how “normal” conversations unfold. Andrew Dai, a Google software engineer and the project lead, says the ultimate goal behind this project is to help Google understand the sentiment and meaning behind queries, rather than just responding with facts.

In an email to The Verge, he writes, “It would be much more satisfying to ask Google questions if it really understood the nuances of what you were asking for, and could reply in a more natural and familiar way… It’s like how you’d rather ask a friend about what to do at a certain vacation spot, instead of calling the [vacation spot’s] visitor center…”

This is all well and good, but you might be wondering why romance novels in particular. In doing some reading myself (purely for research purposes), I can confirm that the plots found in typical romance novels are rather predictable:

- Two characters fall in love, but then experience a conflict of some sort. In the end, they get their happy ending.

- Two characters from very different backgrounds face obstacles on their quest to be together. In the end, they overcome those obstacles and live happily ever after.

- Two characters hate each other at first, but through a series of unexpected, yet bond-inducing events, realize they were meant to be together. And surprise – they get together and live happily ever after or ride off into the sunset as a couple (you get the idea).

Andrew Dai confirms that because romance novels follow this very predictable format, they’re ideal for this purpose: “Girl falls in love with boy, boy falls in love with a different girl. Romance tragedy.”

Deep Learning-Aided Content Marketing

So what does it all mean for online marketers? The Google experiments point to a growing need to create online content that typical online consumers will find engaging. When applied to Web content development, deep learning can quickly surface the opportunities, themes and research needed to identify and match the user’s intent. Companies that don’t invest in it will become also-rans.

In the growing field of agile content creation, deep learning is the missing link in a marketer’s goal of creating engaging content. Agile marketing treats content creation as only one part of the overall agile development process of delivering a product; agile content creation, by contrast, acknowledges that for many online users, content is the experience.

To address that, deep learning-based agile content creation puts continuously updated information, guided by natural-language patterns, at the content developer’s fingertips.

In short, if Google understands human sentiment and drama a little bit better than it did before, you should too. Must-have checklist items like ranking factors, keywords and backlinks increasingly are becoming table stakes in the great online gamble. As marketers develop mobile and desktop strategies, it’s important to think about new ways to connect to your target audience. Google already is.