This post was updated on July 11, 2016.

All aboard! The Google quality train is making its way through the internet once again. This time, the engineer driving this metaphorical train is driven by quality and improved user experience. Even though this is an unconfirmed update from Google, we at Searchmetrics are seeing changes in a number of domains that were previously rewarded by Phantom.

Especially where quality signals are concerned, we’re seeing connections to the work of previous Phantom updates. Therefore, we are unofficially considering the fluctuations to be signs of “Phantom 4,” since clear trends have emerged in our data indicating that Google is devaluing specific areas of domains exhibiting poor quality and experience for users.

To be clear, there is no easy answer here. While we can observe multiple quality factors (404 error pages, too many ads on the page, etc.) as potential contributors to a decrease in page rankings, truly understanding how Google ranks for quality and user intent is a new frontier in search. We’re now scrutinizing the SERPs to determine the more nebulous aspects of Google’s ranking mechanisms at work.

There’s a great quote by Marcel Proust that frames what we’re trying to unearth here. It says, “Discovery consists not in seeking new lands but in seeing with new eyes.”

We have to try to see with new eyes.

As such, there are a few things to keep in mind when Phantom is at the wheel, especially now that RankBrain and other algorithm updates are aboard:

- Phantom is aimed at assessing quality and is focused on user intent. It’s part of Google’s core ranking algorithm, which means Phantom continuously adapts how it processes quality signals. For webmasters, this means changes can appear at any time, unannounced.

- We saw Phantom II operating on a page-level basis back in June 2015, and we are seeing similar patterns again. This means that low-quality pages are affected individually rather than the domain as a whole. Nevertheless, a large number of underperforming pages will still indirectly hurt the entire domain. The good news is that clear areas of underperformance tell webmasters exactly where to make improvements.

According to our data, we’re seeing some recent steep declines in SEO Visibility impacting domains starting in late June and lasting through these first weeks of July. The larger SEO community (e.g. blogs by Glenn Gabe and Barry Schwartz) has also observed fluctuations during June and published its observations.

Top Themes for Phantom 4

Below are the top symptoms of Phantom 4 as we have observed them based on the affected domains from our list of Week of July 3rd SEO Visibility Winners/Losers. Keep in mind that it’s still too early to label any related results as the direct effect of a Google update (such as a potential “Phantom 4”), but the data does indicate certain areas where pages on the loser domains are exhibiting poor quality. Here, we’ll attempt to surface some early findings, and offer conjectures as to why these pages may have lost SEO Visibility in such a short timeframe.

Theme #1: Too many ads, too little content

| Domain | SEO Visibility Previous | SEO Visibility 7/3/2016 | Net loss |
| --- | --- | --- | --- |
| cookinglight.com | 165,233 | 119,748 | -45,485 |

This domain, within the recipe and food vertical, is showing a recent, sharp decline in SEO Visibility. The chart shows SEO Visibility declining simultaneously on both mobile and desktop, which indicates that this quality update is not device-specific. We noted the same pattern occurring on another domain within the same vertical: myrecipes.com (analyzed later).

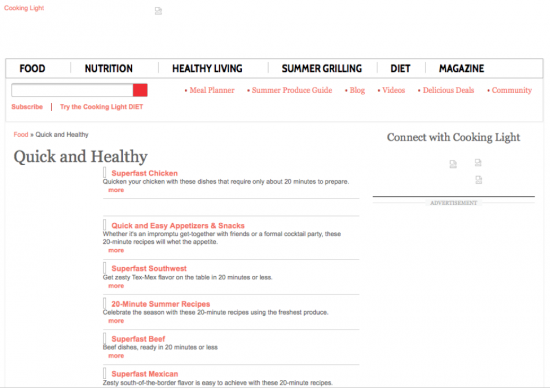

Here’s a quick screenshot of what the /food/quick-healthy page looks like with images disabled: not much content for users, or search engines for that matter. On the plus side, I’m a lot less hungry looking at all those images of avocados.

Theme #2: Pages do not match keyword (and therefore user) intent

Domain |

SEO Visibility Previous |

SEO Visibility 7/3/2016 |

Net loss |

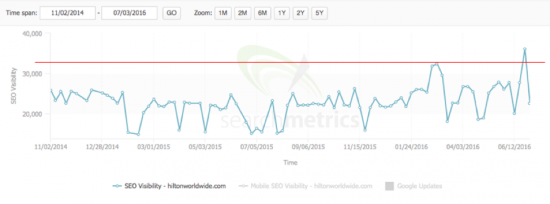

| hiltonworldwide.com | 36,088 | 22,589 | -13,499 |

This is a tricky SEO situation for any enterprise that owns multiple properties and keeps its corporate domains separate from its customer-facing site. Ideally, canonical tags should be used on this version of the Hilton consumer domain, pointing to the root domain in order to avoid confusing search engines as to which domain should rank for the brand term, “Hilton.”
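As a rough way to audit which canonical URL a page currently declares, the check can be sketched with Python’s standard library. This is a minimal sketch, not a description of how Searchmetrics or Google evaluates canonicals: the class and function names (`CanonicalFinder`, `find_canonical`) are hypothetical, and the sample markup uses a placeholder domain.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Records the href of the first <link rel="canonical"> tag it sees."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        # Only <link> tags matter, and only the first canonical is kept.
        if tag != "link" or self.canonical is not None:
            return
        attributes = dict(attrs)
        # rel can hold several space-separated values, e.g. rel="canonical nofollow".
        rel_values = (attributes.get("rel") or "").lower().split()
        if "canonical" in rel_values:
            self.canonical = attributes.get("href")


def find_canonical(html: str):
    """Return the declared canonical URL, or None if the page has none."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonical


# Placeholder markup for illustration only.
sample_page = (
    '<html><head><link rel="canonical" href="https://www.example.com/">'
    "</head><body></body></html>"
)
print(find_canonical(sample_page))
```

Running this over the HTML of each page on a secondary domain would show whether a canonical is declared at all and where it points.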

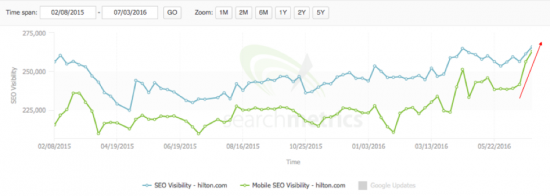

Have a quick look below at the SEO Visibility of the root domain, hilton.com. See what I mean? Both desktop and mobile are increasing in SEO Visibility whilst the corporate domain (above) remains volatile.

Having pages that reflect the intent of keywords is very important for rankings going forward. If the page doesn’t relate to what the user wants to achieve (gleaning information or making a transaction), that’s an indicator of poor quality that’s recognized by filters like Phantom.

Theme #3: Multiple quality signals at work: page design, layout, and content are lacking

Domain |

SEO Visibility Previous |

SEO Visibility 7/3/2016 |

Net loss |

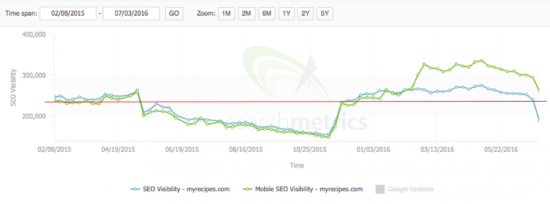

| myrecipes.com | 239,281 | 188,677 | -50,604 |

This domain serves as an example of a larger pattern we’re observing when looking at the domain history over time, as shown below. Some domains, like this one, appear to have been positively affected by previous Phantoms but now appear to be negatively impacted by Phantom 4.

This domain is also in the recipe vertical (similar to cookinglight.com), but shows a steep increase in SEO Visibility during the Phantom 3 quality update in November 2015. In contrast, it now shows a sharp decline during this potential new update, Phantom 4. During the Phantom 3 update, these keywords and URLs moved up to positions 3 and 5, respectively:

- “turkey meatloaf” http://www.myrecipes.com/recipe/turkey-meatloaf-0

- “shrimp and grits” http://www.myrecipes.com/recipe/cheesy-shrimp-grits

From what we see now, those same two URLs are not among the pages that have lost SEO Visibility during Phantom 4. Instead, these two URLs are being impacted negatively:

- “chicken salad recipe” http://www.myrecipes.com/ingredients/chicken-recipes/best-chicken-salad

- “sweet and sour chicken” http://www.myrecipes.com/recipe/sweet-sour-chicken-4

What can we glean from this? The main content of the page contains the recipe itself. But perhaps the user experience presented on this type of page is better on another domain? A quick scan of the top organic listings for a search on “chicken salad recipe” confirms that the ranking domains offer pages with fewer ads, pictures illustrating the process, and even advice on what type of wine pairs well with this dish.

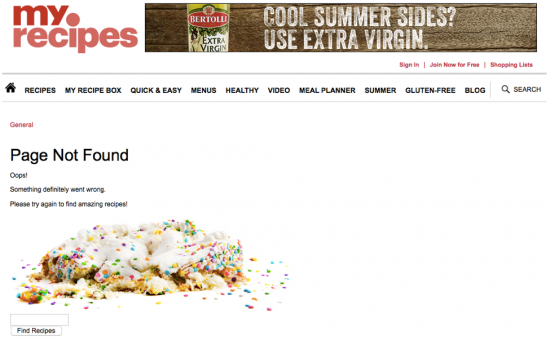

Still, a higher level page might also be impacting rankings. Our Suite data highlights which of the top level directories within a domain contain pages which are currently losing visibility. For this domain in particular, these are /recipe/, /how-to/, and /menus/. Specifically, this page returns a 404: http://www.myrecipes.com/recipe
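To illustrate the idea of rolling page-level changes up to top-level directories, here is a minimal sketch. It is not how the Searchmetrics Suite computes this: the function names, the `example.com` URLs, and the change values are all made up for illustration.

```python
from collections import defaultdict
from urllib.parse import urlparse


def top_level_directory(url: str) -> str:
    """Return the first path segment as '/segment/', or '/' for the home page.

    Assumption: a single-segment path such as /recipe is treated as a directory.
    """
    segments = [s for s in urlparse(url).path.split("/") if s]
    return f"/{segments[0]}/" if segments else "/"


def visibility_change_by_directory(page_changes):
    """Sum per-page visibility changes by top-level directory.

    page_changes is an iterable of (url, change) pairs. The result is a list
    of (directory, total_change) pairs, biggest loser first.
    """
    totals = defaultdict(float)
    for url, change in page_changes:
        totals[top_level_directory(url)] += change
    return sorted(totals.items(), key=lambda item: item[1])


# Hypothetical numbers, not Searchmetrics data.
example_changes = [
    ("https://example.com/recipe/page-a", -3.0),
    ("https://example.com/how-to/page-b", -1.5),
    ("https://example.com/recipe/page-c", 0.5),
]
for directory, total in visibility_change_by_directory(example_changes):
    print(directory, total)
```

Sorting by the summed change puts the directories that deserve a closer look at the top of the list.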

Ultimately, a combination of several quality factors is plaguing these pages: poor page design, too many ads, and pages with little actual content that is helpful or relevant to the user. But, perhaps to a higher degree, a category level page returning an error is having a larger impact on quality. How many of these pages are being affected is difficult to determine exactly.

Nonetheless, it’s interesting to note this pattern appears on other domains and in unrelated verticals.

Theme #4: Large amount of 404 status codes – error pages

Domain |

SEO Visibility Previous |

SEO Visibility 7/3/2016 |

Net loss |

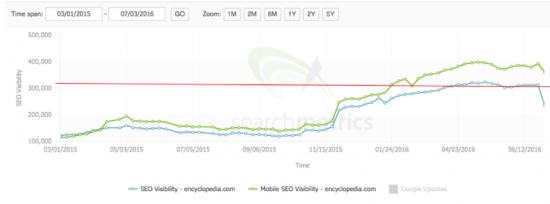

| encyclopedia.com | 310,202 | 233,822 | -76,380 |

Here again, we see a domain with two patterns: first, the domain appears to have benefitted during the Phantom 3 update, but is now being negatively impacted during Phantom 4. Second, the two top level category pages, /topic/ and /doc/ return a 404 (Page Not Found) error.

Is it possible that, with this magnitude of poor user experience, these category-level pages carry enough page strength to affect the entire domain in a quality update, even enough to cause the domain to suffer from the Phantom 4 quality update? Given the multitude of factors involved in SEO, we can only speculate, especially since there appear to be other observable page-level quality patterns that could also be contributing to the overall loss in SEO Visibility.

Domain |

SEO Visibility Previous |

SEO Visibility 7/3/2016 |

Net loss |

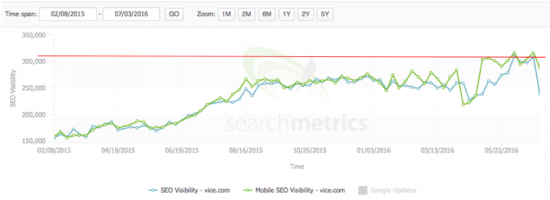

| vice.com | 307,629 | 238,600 | -69,029 |

Different vertical, similar issue. Here we see the Phantom 4 update affecting the Vice domain where a good majority of their category level pages (and subdomains) return a 404 error. What’s interesting is that we still see some pages contained within these directories in the Google index, indicating that the directory as a whole is not affected. That said, it’s certainly not optimal that category level pages – potentially housing the most links and SEO value – return a 404 error:

- www.vice.com/read (redirects to the home page)

- broadly.vice.com/en_us/article

- noisey.vice.com/blog

- news.vice.com/article
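Because the five domains above start from very different SEO Visibility levels, it can help to put the net losses on a common scale. The sketch below uses only the figures from the tables in this post; the function names are my own, and the percentage is a simple change relative to the previous value.

```python
# SEO Visibility figures (previous, 7/3/2016) copied from the tables in this post.
VISIBILITY = {
    "cookinglight.com": (165_233, 119_748),
    "hiltonworldwide.com": (36_088, 22_589),
    "myrecipes.com": (239_281, 188_677),
    "encyclopedia.com": (310_202, 233_822),
    "vice.com": (307_629, 238_600),
}


def relative_change(previous: int, current: int) -> float:
    """Return the change as a percentage of the previous value."""
    return (current - previous) / previous * 100


def rank_by_relative_change(data):
    """Return (domain, net_change, percent_change) rows, steepest drop first."""
    rows = [
        (domain, current - previous, relative_change(previous, current))
        for domain, (previous, current) in data.items()
    ]
    return sorted(rows, key=lambda row: row[2])


for domain, net, percent in rank_by_relative_change(VISIBILITY):
    print(f"{domain:<22} {net:>8,} {percent:6.1f}%")
```

Seen this way, hiltonworldwide.com has the smallest absolute loss of the five but the steepest relative drop, at roughly 37%, while the other four fall between about 21% and 28%.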

How do we stop this train?

You can’t. But, knowing that the Phantom 4 quality update is circulating means taking action on the areas you know are being impacted: user experience and content. Here are a few quick tips to get you started:

- Assess your site’s user signals (few organic visits to certain pages): Identify areas of your domain where users bounce or exit your site. That’s where you need to focus on making improvements to the type of content and user experience you provide.

- Maintain your site architecture: Make sure to crawl your domain regularly and promptly redirect any pages that resolve to 404 errors. Check Search Console for increases in 404s as well.

- Decrease the number of display ads: Ensure that there is a balance between quality content and the advertorial experience presented on the page. Seriously, this isn’t Vegas.
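For the architecture tip above, a recurring status check over your most important URLs can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not a full crawler: `fetch_status` and `find_404s` are hypothetical helper names, the check uses HEAD requests (which some servers reject), and network failures are left to raise so that they are not mistaken for a clean result.

```python
import urllib.error
import urllib.request


def fetch_status(url: str, timeout: float = 10.0) -> int:
    """Return the HTTP status code for url after following redirects."""
    request = urllib.request.Request(
        url,
        method="HEAD",
        headers={"User-Agent": "status-check-sketch"},  # placeholder user agent
    )
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        # 4xx and 5xx responses arrive as exceptions; the code is what we want.
        return error.code


def find_404s(urls, fetch=fetch_status):
    """Return the URLs whose status code is 404.

    fetch is injectable so the logic can be exercised without network access.
    """
    return [url for url in urls if fetch(url) == 404]
```

A call such as `find_404s(["https://example.com/recipe", "https://example.com/how-to"])`, with your own category URLs in place of the placeholders, returns the ones that need a redirect or a fix. Because redirects are followed, a URL that redirects to the home page is reported with the home page's status, so redirect chains need a separate check.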

Many of these examples indicate that we’re in the new normal of continuously running algorithm updates. SEO has always operated without official announcements from Google on which updates are running and when, but the fact remains: the data we’re seeing here indicates that there is likely an update at work. While it’s more challenging to accurately distinguish which update is causing fluctuations at any given time, we know that Phantom focuses on quality and user intent, which means content needs to be easily accessible and organized on the page for easy consumption.

Update on July 19, 2016

I wanted to offer a new perspective on the topic after further examining the effects of top level 404 error pages.

Although 404s appeared on several of the analyzed domains, they may not be directly linked to symptoms of Phantom in these cases. Still, this goes against basic SEO architecture and good housekeeping of top level pages, especially internal linking that is directed to these pages. But, it’s likely this has less to do with how Phantom 4 is operating at this time.

Furthermore, when taking a deeper look at the domains and pages, we noticed an above-average number of what we think are spammy-looking links. A backlink profile with spammy backlinks is nothing new in itself, but here we’re talking about quality at the page level. This is still a hypothesis, but it is a factor we will continue to monitor.

What are your observations about recent fluctuations on your site? Let me know in the comments.